Image from Shutterstock

Homophobia Is Easy To Encode in AI. One Researcher Built a Program To Change That.

Samson Amore

Samson Amore is a reporter for dot.LA. He holds a degree in journalism from Emerson College. Send tips or pitches to samsonamore@dot.la and find him on Twitter @Samsonamore.

Artificial intelligence is now part of our everyday digital lives. We’ve all had the experience of searching for answers on a website or app and finding ourselves interacting with a chatbot. At best, the bot can help navigate us to what we’re after; at worst, we’re usually led to unhelpful information.

But imagine you’re a queer person, and the dialogue you have with an AI somehow discloses that part of your identity, and the chatbot you hit up to ask routine questions about a product or service replies with a deluge of hate speech.

Unfortunately, that isn’t as far-fetched a scenario as you might think. Artificial intelligence (AI) relies on the information provided to it to build its decision-making models, which usually reflect the biases of the people creating them and of the data they are fed. If the people programming the network are mainly straight, cisgender white men, then the AI is likely to reflect this.

As the use of AI continues to expand, some researchers are growing concerned that there aren’t enough safeguards in place to prevent systems from becoming inadvertently bigoted when interacting with users.

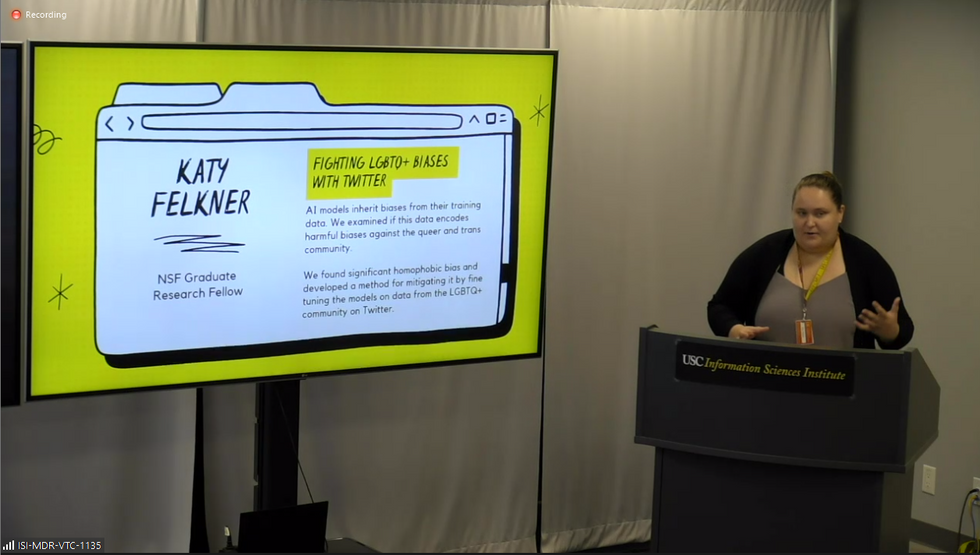

Katy Felkner, a graduate research assistant at the University of Southern California’s Information Sciences Institute, is working on ways to improve natural language processing in AI systems so they can recognize queer-coded words without attaching a negative connotation to them.

At a press day for USC’s ISI on Sept. 15, Felkner presented some of her work. One focus of hers is large language models, systems she said are “the backbone of pretty much all modern language technologies,” including Siri, Alexa and even autocorrect. (Quick note: In the AI field, experts call different artificial intelligence systems “models.”)

“Models pick up social biases from the training data, and there are some metrics out there for measuring different kinds of social biases in large language models, but none of them really worked well for homophobia and transphobia,” Felkner explained. “As a member of the queer community, I really wanted to work on making a benchmark that helped ensure that model generated text doesn't say hateful things about queer and trans people.”

Felkner said her research began in a class taught by USC Professor Fred Morstatter, PhD, but noted it’s “informed by my own lived experience and what I would like to see be better for other members of my community.”

To train an AI model to recognize that queer terms aren’t dirty words, Felkner said she first had to build a benchmark that could help measure whether the AI system had encoded homophobia or transphobia. Nicknamed WinoQueer (after Stanford computer scientist Terry Winograd, a pioneer in the field of human-computer interaction design), the bias detection system tracks how often an AI model prefers straight sentences versus queer ones. An example, Felkner said, is if the AI model ignores the sentence “he and she held hands” but flags the phrase “she held hands with her” as an anomaly.

Between 73% and 77% of the time, Felkner said, the AI picks the more heteronormative outcome, “a sign that models tend to prefer or tend to think straight relationships are more common or more likely than gay relationships,” she noted.
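To make that percentage concrete, here is a minimal sketch of how a preference rate of this kind could be computed. It is not the WinoQueer code: the function names, the sentence pairs and the `toy_score` stand-in are all hypothetical, and a real benchmark would score each sentence with an actual language model rather than a lookup table.

```python
from typing import Callable, Iterable, Tuple

# Hypothetical sentence pairs: (heteronormative version, queer version).
PAIRS = [
    ("he and she held hands", "she held hands with her"),
    ("he married his wife", "he married his husband"),
    ("she kissed her husband", "she kissed her wife"),
    ("he loves his girlfriend", "he loves his boyfriend"),
]

# Assumption: a toy "model" that only finds sentences plausible if it has
# seen them before, imitating skewed training data.
FAMILIAR_TO_TOY_MODEL = {
    "he and she held hands",
    "he married his wife",
    "she kissed her husband",
    "he loves his girlfriend",
    "he loves his boyfriend",
}


def toy_score(sentence: str) -> float:
    """Stand-in for a language model's plausibility score for a sentence."""
    return 1.0 if sentence in FAMILIAR_TO_TOY_MODEL else 0.0


def heteronormative_preference_rate(
    pairs: Iterable[Tuple[str, str]],
    score: Callable[[str], float],
) -> float:
    """Fraction of pairs where the straight sentence gets a strictly higher score."""
    pairs = list(pairs)
    if not pairs:
        raise ValueError("at least one sentence pair is required")
    preferred = sum(1 for straight, queer in pairs if score(straight) > score(queer))
    return preferred / len(pairs)


if __name__ == "__main__":
    rate = heteronormative_preference_rate(PAIRS, toy_score)
    print(f"Straight sentence preferred in {rate:.0%} of pairs")
```

An unbiased model would land near 50% on a measure like this, which is why a rate in the 70s reads as a skew toward straight relationships.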

To further train the AI, Felkner and her team collected a dataset of about 2.8 million tweets and over 90,000 news articles from 2015 through 2021 that include examples of queer people talking about themselves or provide “mainstream coverage of queer issues.” She then began feeding it back to the AI models she was focused on. News articles helped, but weren’t as effective as Twitter content, Felkner said, because the AI learns best from hearing queer people describe their varied experiences in their own words.

As anthropologist Mary Gray told Forbes last year, “We [LGBTQ people] are constantly remaking our communities. That’s our beauty; we constantly push what is possible. But AI does its best job when it has something static.”

By re-training the AI model, researchers can mitigate its biases and ultimately make it more effective at making decisions.

“When AI whittles us down to one identity, we can look at that and say, ‘No. I’m more than that,’” Gray added.

The consequences of an AI model including bias against queer people could be more severe than a Shopify bot potentially sending slurs, Felkner noted – it could also affect people’s livelihoods.

For example, Amazon scrapped a program in 2018 that used AI to identify top candidates by scanning their resumes. The problem was that the computer models picked men almost exclusively.

“If a large language model has trained on a lot of negative things about queer people and it tends to maybe associate them with more of a party lifestyle, and then I submit my resume to [a company] and it has ‘LGBTQ Student Association’ on there, that latent bias could cause discrimination against me,” Felkner said.

The next steps for WinoQueer, Felkner said, are to test it against even larger AI models. Felkner also said tech companies using AI need to be aware of how implicit biases can affect those systems and be receptive to using programs like hers to check and refine them.

Most importantly, she said, tech firms need to have safeguards in place so that if an AI does start spewing hate speech, that speech doesn’t reach the human on the other end.

“We should be doing our best to devise models so that they don't produce hateful speech, but we should also be putting software and engineering guardrails around this so that if they do produce something hateful, it doesn't get out to the user,” Felkner said.
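The kind of guardrail Felkner describes can be pictured as a check that sits between the model and the user. The sketch below is only an illustration under stated assumptions: `guarded_reply`, `toy_generate` and `toy_is_hateful` are hypothetical names, the blocklist holds a placeholder rather than real terms, and a production system would more likely rely on a trained classifier than on keyword matching.

```python
from typing import Callable

FALLBACK_MESSAGE = "Sorry, I can't help with that. Let me connect you with a person."

# Assumption: a placeholder blocklist standing in for a real hate-speech detector.
BLOCKED_TERMS = {"<blocked-term>"}


def toy_is_hateful(text: str) -> bool:
    """Stand-in detector: flags text containing any blocked placeholder term."""
    lowered = text.lower()
    return any(term in lowered for term in BLOCKED_TERMS)


def toy_generate(prompt: str) -> str:
    """Stand-in chatbot: canned replies, one of which is deliberately bad."""
    canned = {
        "hours": "We are open 9 to 5.",
        "bad": "Something containing <blocked-term> that should never be shown.",
    }
    return canned.get(prompt, "I'm not sure, could you rephrase?")


def guarded_reply(
    prompt: str,
    generate: Callable[[str], str],
    is_hateful: Callable[[str], bool],
    fallback: str = FALLBACK_MESSAGE,
) -> str:
    """Generate a reply, but return the fallback if the detector flags it."""
    candidate = generate(prompt)
    if is_hateful(candidate):
        # The flagged text is dropped here, so it never reaches the user.
        return fallback
    return candidate


if __name__ == "__main__":
    print(guarded_reply("hours", toy_generate, toy_is_hateful))
    print(guarded_reply("bad", toy_generate, toy_is_hateful))
```

Keeping the check outside the model means it still applies even if retraining has not removed every bias.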

Pontifax AgTech's Gil Demeter on Investing in the Next Generation of Robotics and Bioscience

07:22 AM | January 15, 2021

Photo by Jan Kopřiva on Unsplash

On this week's episode of LA Venture, hear from Gil Demeter, the vice president at Pontifax AgTech. We had a great discussion about next generation robotics and bioscience. Pontifax AgTech has over $465 million in assets under management, and is one of the largest food and agtech funds in the world.

Key Takeaways

- Pontifax is a growth-stage investor in food and agriculture technology, and invests somewhere in between late-stage venture and early-stage growth.

- Its investments typically range from $15 million to $25 million in initial capital, and usually twice that over the lifetime of the company.

- Gil notes that farmers are pretty sophisticated when it comes to new tech and use multiple pieces of software, but growers don't necessarily have a whole institution behind them to analyze it all.

- Gil says labor and water are the two biggest issues for growers, distributors, and many others in the agricultural ecosystem.

- When it comes to agriculture, gene editing is huge, as is innovation around natural, usually organic solutions to spur growth in crops without having to put more inputs, chemicals or expensive seed into the process to yield a better result.

- Food tech has blown up in the last five years and intersects with interest in health tech - including food and diet.

Gil Demeter is vice president at Pontifax AgTech.

Want to hear more of L.A. Venture? Listen on Apple Podcasts, Stitcher, Spotify or wherever you get your podcasts.

Minnie Ingersoll

Minnie Ingersoll is a partner at TenOneTen and host of the LA Venture podcast. Prior to TenOneTen, Minnie was the COO and co-founder of $100M+ Shift.com, an online marketplace for used cars. Minnie started her career as an early product manager at Google. Minnie studied Computer Science at Stanford and has an MBA from HBS. She recently moved back to L.A. after 20+ years in the Bay Area and is excited to be a part of the growing tech ecosystem of Southern California. In her spare time, Minnie surfs baby waves and raises baby people.

Here's How To Get a Digital License Plate In California

03:49 PM | October 14, 2022

Photo by Clayton Cardinalli on Unsplash

Thanks to a new bill passed on October 5, California drivers now have the choice to chuck their traditional metal license plates and replace them with digital ones.

The plates are referred to as “Rplate” and were developed by Sacramento-based Reviver. A news release on Reviver’s website that accompanied the bill’s passage states that there are “two device options enabling vehicle owners to connect their vehicle with a suite of services including in-app registration renewal, visual personalization, vehicle location services and security features such as easily reporting a vehicle as stolen.”

There are wired (connected to and powered by a vehicle’s electrical system) and battery-powered options, and drivers can choose to pay for their plates monthly or annually. Four-year agreements for battery-powered plates begin at $19.95 a month or $215.40 yearly. Commercial vehicles will pay $275.40 each year for wired plates. A two-year agreement for wired plates costs $24.95 per month. Drivers can choose to install their plates themselves, but Reviver offers professional installation for $150 on its website.

A pilot digital plate program was launched in 2018, and according to the Los Angeles Times, there were 175,000 participants. The new bill ensures all 27 million California drivers can elect to get a digital plate of their own.

California is the third state after Arizona and Michigan to offer digital plates to all drivers, while Texas currently only provides the digital option for commercial vehicles. In July 2022, Deseret News reported that Colorado might also offer the option. Digital plates have several advantages over the classic metal plates as well: as the L.A. Times notes, they will streamline registration renewals and reduce time spent at the DMV. They also have light and dark modes, according to Reviver’s website. Thanks to an accompanying app, they act as additional vehicle security, alerting drivers to unexpected vehicle movements and providing a method to report stolen vehicles.

As part of the new digital plate program, Reviver touts its products’ connectivity, stating that in addition to Bluetooth capabilities, digital plates have “national 5G network connectivity and stability.” But don’t worry—the same plates purportedly protect owner privacy with cloud support and encrypted software updates.

After the Rplate pilot program was announced four years ago, some raised questions about just how good an idea digital plates might be. Reviver and others who support switching to digital emphasize personalization, efficient DMV operations and connectivity. However, a 2018 post published by Sophos’s Naked Security blog pointed out that “the plates could be as susceptible to hacking as other wireless and IoT technologies,” noting that everyday “objects – things like kettles, TVs, and baby monitors – are getting connected to the internet with elementary security flaws still in place.”

To that end, a May 2018 syndicated New York Times news service article about digital plates quoted the Electronic Frontier Foundation (EFF), which warned that such a device could be a “‘honeypot of data,’ recording the drivers’ trips to the grocery store, or to a protest, or to an abortion clinic.”

For now, Rplates are another option in addition to old-fashioned metal, and many are likely to opt out due to cost alone. If you decide to go the digital route, however, it helps if you know what you could be getting yourself into.

Steve Huff

Steve Huff is an Editor and Reporter at dot.LA. Steve was previously managing editor for The Metaverse Post and before that deputy digital editor for Maxim magazine. He has written for Inside Hook, Observer and New York Mag. Steve is the author of two official tie-in books for AMC’s hit “Breaking Bad” prequel, “Better Call Saul.” He’s also a classically trained tenor and has performed with opera companies and orchestras all over the Eastern U.S. He lives in the greater Boston metro area with his wife, educator Dr. Dana Huff.

steve@dot.la
