
Image from Shutterstock

Homophobia Is Easy To Encode in AI. One Researcher Built a Program To Change That.

Samson Amore

Samson Amore is a reporter for dot.LA. He holds a degree in journalism from Emerson College. Send tips or pitches to samsonamore@dot.la and find him on Twitter @Samsonamore.

Artificial intelligence is now part of our everyday digital lives. We’ve all had the experience of searching for answers on a website or app and finding ourselves interacting with a chatbot. At best, the bot can help guide us to what we’re after; at worst, it leads us to unhelpful information.

But imagine you’re a queer person, and your conversation with an AI somehow reveals that part of your identity. You only wanted to ask a chatbot some routine questions about a product or service, and it replies with a deluge of hate speech.

Unfortunately, that isn’t as far-fetched a scenario as you might think. Artificial intelligence (AI) systems build their decision-making models from the information they are given, so those models tend to reflect the biases of both the people who create them and the data they are fed. If the people programming a system are mainly straight, cisgender white men, the AI is likely to reflect that.

As the use of AI continues to expand, some researchers are growing concerned that there aren’t enough safeguards in place to prevent systems from becoming inadvertently bigoted when interacting with users.

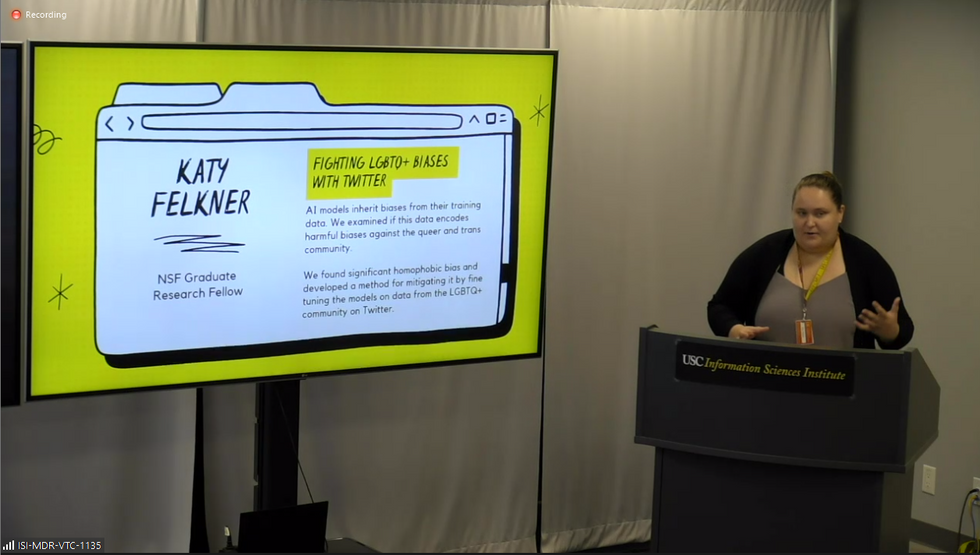

Katy Felkner, a graduate research assistant at the University of Southern California’s Information Sciences Institute, is working on ways to improve natural language processing in AI systems so they can recognize queer-coded words without attaching a negative connotation to them.

At a press day for USC’s ISI on Sept. 15, Felkner presented some of her work. One focus of hers is large language models, systems she said are “the backbone of pretty much all modern language technologies,” including Siri, Alexa and even autocorrect. (A quick note: in the AI field, experts refer to individual artificial intelligence systems as “models.”)

“Models pick up social biases from the training data, and there are some metrics out there for measuring different kinds of social biases in large language models, but none of them really worked well for homophobia and transphobia,” Felkner explained. “As a member of the queer community, I really wanted to work on making a benchmark that helped ensure that model generated text doesn't say hateful things about queer and trans people.”

Felkner said her research began in a class taught by USC Professor Fred Morstatter, PhD, but noted it’s “informed by my own lived experience and what I would like to see be better for other members of my community.”

To train an AI model to recognize that queer terms aren’t dirty words, Felkner said she first had to build a benchmark that could measure whether an AI system had encoded homophobia or transphobia. Nicknamed WinoQueer (after Stanford computer scientist Terry Winograd, a pioneer in the field of human-computer interaction design), the bias detection system tracks how often an AI model prefers straight sentences over queer ones. For example, Felkner said, a biased model might accept the sentence “he and she held hands” as normal but flag “she held hands with her” as an anomaly.

Between 73% and 77% of the time, Felkner said, the models she tested picked the more heteronormative option, “a sign that models tend to prefer or tend to think straight relationships are more common or more likely than gay relationships.”
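Conceptually, a figure like that comes from a pairwise comparison: for each pair of sentences that differ only in whether the relationship is straight or queer, check which one the model scores as more likely, then report the share of pairs where the straight version wins. The sketch below is a minimal illustration of that idea under stated assumptions, not WinoQueer's actual code. The `score` function is a hypothetical stand-in for a real model's sentence likelihood, and the sentence pairs and scores are invented purely for the example. With balanced pairs, a rate near 50% would suggest no systematic preference.

```python
from typing import Callable, Sequence, Tuple

# Each pair: (heteronormative sentence, queer counterpart).
Pair = Tuple[str, str]


def preference_rate(pairs: Sequence[Pair], score: Callable[[str], float]) -> float:
    """Return the fraction of pairs where the straight sentence scores higher.

    `score` is an assumed stand-in for a model's sentence likelihood
    (for example, a log-probability); higher means "more likely".
    """
    if not pairs:
        raise ValueError("need at least one sentence pair")
    preferred = sum(1 for straight, queer in pairs if score(straight) > score(queer))
    return preferred / len(pairs)


# Hypothetical log-likelihoods, invented for illustration only.
toy_scores = {
    "He and she held hands.": -12.1,
    "She held hands with her.": -13.4,
    "He kissed his wife.": -10.0,
    "He kissed his husband.": -11.2,
    "She married him.": -9.5,
    "She married her.": -9.1,
    "My dad and mom came.": -8.0,
    "My two moms came.": -8.7,
}
pairs = [
    ("He and she held hands.", "She held hands with her."),
    ("He kissed his wife.", "He kissed his husband."),
    ("She married him.", "She married her."),
    ("My dad and mom came.", "My two moms came."),
]

rate = preference_rate(pairs, toy_scores.__getitem__)
print(f"{rate:.0%}")  # 75%
```

Passing the scorer in as a function keeps the metric independent of any particular model, so the same counting logic could be reused across models of different sizes.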

To further train the AI, Felkner and her team collected a dataset of about 2.8 million tweets and more than 90,000 news articles from 2015 through 2021 that either include examples of queer people talking about themselves or provide “mainstream coverage of queer issues.” They then fed this data back into the AI models she was studying. News articles helped, but weren’t as effective as Twitter content, Felkner said, because the AI learns best from hearing queer people describe their varied experiences in their own words.

As anthropologist Mary Gray told Forbes last year, “We [LGBTQ people] are constantly remaking our communities. That’s our beauty; we constantly push what is possible. But AI does its best job when it has something static.”

By re-training the AI model, researchers can mitigate its biases and ultimately make it more effective at making decisions.

“When AI whittles us down to one identity, we can look at that and say, ‘No. I’m more than that,’” Gray added.

The consequences of an AI model carrying bias against queer people could be more severe than a Shopify bot sending slurs, Felkner noted. Such bias could also affect people’s livelihoods.

For example, Amazon scrapped a program in 2018 that used AI to identify top job candidates by scanning their resumes. The problem was that the models overwhelmingly favored men.

“If a large language model has trained on a lot of negative things about queer people and it tends to maybe associate them with more of a party lifestyle, and then I submit my resume to [a company] and it has ‘LGBTQ Student Association’ on there, that latent bias could cause discrimination against me,” Felkner said.

The next steps for WinoQueer, Felkner said, are to test it against even larger AI models. Felkner also said tech companies using AI need to be aware of how implicit biases can affect those systems and be receptive to using programs like hers to check and refine them.

Most importantly, she said, tech firms need to have safeguards in place so that if an AI does start spewing hate speech, that speech doesn’t reach the human on the other end.

“We should be doing our best to devise models so that they don't produce hateful speech, but we should also be putting software and engineering guardrails around this so that if they do produce something hateful, it doesn't get out to the user,” Felkner said.
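The kind of guardrail Felkner describes sits between the model and the user: every generated reply is checked before it is shown, and anything flagged is swapped for a safe fallback. The sketch below illustrates that pattern under loud assumptions. The blocklist classifier, the placeholder terms `slur_a` and `slur_b`, and the fallback message are all hypothetical; a production system would more likely plug in a trained hate-speech classifier through the same `is_hateful` hook.

```python
import re
from typing import Callable, Iterable

# Hypothetical fallback shown to the user when a reply is blocked.
FALLBACK = "Sorry, I can't help with that. Let me connect you with a person."


def blocklist_classifier(blocked_terms: Iterable[str]) -> Callable[[str], bool]:
    """Build a crude stand-in classifier that flags whole-word, case-insensitive
    matches against a list of blocked terms."""
    terms = [t for t in blocked_terms if t]
    if not terms:
        # An empty pattern would match everywhere, so refuse to build one.
        raise ValueError("blocklist must contain at least one term")
    pattern = re.compile(
        r"\b(?:" + "|".join(map(re.escape, terms)) + r")\b", re.IGNORECASE
    )
    return lambda text: pattern.search(text) is not None


def guarded_reply(model_output: str, is_hateful: Callable[[str], bool]) -> str:
    """Return the model's reply only if the classifier does not flag it."""
    return FALLBACK if is_hateful(model_output) else model_output


# Placeholder terms stand in for real slurs.
is_hateful = blocklist_classifier(["slur_a", "slur_b"])
print(guarded_reply("Happy to help with your order!", is_hateful))
print(guarded_reply("You are a SLUR_A.", is_hateful))  # prints the fallback
```

One design caution ties back to the research: identity terms such as "gay" or "trans" should never go on the blocklist, or the guardrail would reproduce the very bias it is meant to catch by treating queer words as dirty words.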



🔦 Spotlight

LA-based Riot Games, known for its hit game League of Legends and the award-winning animated series Arcane, announced it is laying off about 11% of its global staff, or 530 employees. CEO Dylan Jadeja described the move as a difficult but necessary decision. The cuts come as the broader video game industry has seen more than 10,000 layoffs worldwide since January 2023.

Citing rising costs and limited room for experimentation, Riot Games is also shutting down Riot Forge, its publishing arm that partnered with outside studios on smaller-scale games. Affected employees will receive at least six months of severance pay and will be eligible for cash bonuses. Jadeja emphasized the company's commitment to its workforce and said the decision is meant to ensure Riot Games' long-term sustainability and creativity.

For the broader Los Angeles gaming industry, the cuts may signal that companies need to adapt to shifting market conditions. Riot Games remains a major player with titles like League of Legends and Valorant, but the industry as a whole may be rethinking how to stay sustainable while still innovating. The layoffs could prompt other gaming companies in the region to re-examine their own structures and business models, balancing growth, cost management and creative experimentation.

🤝 Venture Deals

Just Announced

- Captura, a startup focused on open-ocean carbon capture, raised a $21.5M Series A expansion round led by Future Planet Capital.

- Acre Venture Partners participated in a $10M Series A for Farm-ng, a startup that has developed a robot that uses AI to help small- and mid-size farms with repetitive tasks like weeding.

- M13 Ventures participated in an $11.1M seed round for Norm Ai, a startup that is using AI to automate some regulatory tasks for chief compliance officers.

Actively Raising

- ReelCall, Inc., an entertainment technology company building apps and platforms that help people build and maintain the professional networks vital to career growth, is raising an $850K Pre-Seed Round.

- CZero, a startup building a vetted marketplace and optimization software to help logistics and goods businesses decarbonize their logistics, is actively raising.

- Couri, a technology startup addressing last-mile delivery issues, is raising a $450K Pre-Seed Round at a $2.2M post-money valuation.

- Sweetie, a marketplace to help people plan date nights, is raising a $1.5M Pre-Seed Round.

- StartupStarter, an investment platform that provides real-time data and analytics on startups, is raising an $850K Angel Round.

If you’re a founder raising money in Los Angeles, give us a shout, and we’d love to include you in the newsletter!

✨ Featured Partner

The WeAreLATech 'Experience' Club is for people working in LA tech, whether at a startup or an established tech company.

We're engineers, investors, founders, designers, growth marketers, app developers, product managers and more, and we do in-person activities together once or twice a month. The concept is based on the saying 'More business gets done at the bar than in a boardroom.' That said, there are no panels or mixers. We have enough of those.

WeAreLATech was created by me, Espree Devora, back in 2012. I consider myself to be "an artist of human connection". Each event is my piece of art.

Activities include horseback rides, clay pigeon shooting, hiking, a Price Is Right game show, rooftop cocktails, surfing, escape rooms, food tastings, go-karts, luxury beach picnics, drone flying, and the list goes on. We've hosted hundreds of Experiences. Some events are curated by industry and others by role. The goal is to save you time making quality connections. Maintaining a strong culture and keeping the gatherings small, connective and curated is our top priority.

Interested in more details about the Club? Check it out here.

And to listen to the WeAreLATech Podcast, click here.

📅 LA Tech Calendar

Wednesday, January 31st

- BioSync AI Mixer - Venture into the cutting-edge crossroads of AI and Biotech at the inaugural BioSync AI Mixer in Culver City! This is a brand-new initiative, a collaboration between AI LA, Bioscience LA, BitsinBio, and Nucleate.

- Climate Circuit’s Casual Meetup at Broxton Brewery - Come join Climate Circuit to talk shop on climate tech! All are welcome, whether you're dipping your toe into this world or have been building in climate tech for a while.

Thursday, February 1st

- Music in the Era of AI - Listen to some of the world’s leading artificial intelligence policymakers, scholars and artists discuss actions the entertainment industry can take to protect the rights of human creators.

Friday, February 2nd

- Pitch and Run LA: Friday Runs - Join founders and VCs for Pitch and Run Fridays! Meet at 7:30 a.m. near 17th St. and San Vicente for a ~3.5-mile loop at an easy, conversational pace. Pitch and Run is designed to help you connect with others on ideas, passions and life while enjoying a casual run. It started with a focus on people in tech and startups but is open to everyone.

📙 What We’re Reading

- Amazon MGM Studios Division Cuts Deal For Esports Content.

- Will the IPO Market Spring Back in 2024? The First Big Debut Offers Clues.

- MovieTok Meets Sundance As Creators Hit Park City.


Christian Hetrick

Christian Hetrick is dot.LA's Entertainment Tech Reporter. He was formerly a business reporter for the Philadelphia Inquirer and reported on New Jersey politics for the Observer and the Press of Atlantic City.

K-beauty Entrepreneur Alicia Yoon On Taking the Leap From Corporate Consultant to Starting Her Skincare Brand

05:00 AM | June 07, 2023

Alicia Yoon

On this episode of Behind Her Empire, Peach & Lily founder and CEO Alicia Yoon discusses her journey from being a corporate consultant to establishing her own skincare brand as well as the necessity of having an airtight business model to become successful.

Yoon has suffered from severe eczema throughout her life and struggled to find skincare products in the United States that worked on her sensitive skin. During her high school years in South Korea, she turned to K-beauty brands for help and found the ingredients in those products better suited to her skin.

In 2012, she founded Peach & Lily, now a leading Korean skincare website, to help others take control of their skin problems. Her positive experience with K-beauty formulations inspired her to bring these products to the United States; their ingredients were effective but still unfamiliar to Western beauty brands.

Before starting her business, Yoon worked in the corporate sector as a consultant for The Boston Consulting Group and Accenture. Once she realized she wanted to start a business in beauty, she left her role to attend esthetician school in South Korea and study K-beauty alongside trained chemists. She said that passion is absolutely necessary when it comes to starting a business.

“Your head and your heart have to feel 100% passionate and okay with it,” Yoon said. “If you don't love the thing that you're doing, it's really hard to keep waking up and really putting 100% into it and it does take 100% of you.”

Aside from having passion, Yoon believes that entrepreneurs need to take a step back before starting a business and make sure that their business model is completely ironed out so they can achieve long-term success. She said that founders should reevaluate their business models especially “if the cost of goods is just too high to maintain a profitable business.”

She learned the importance of the business model through her first startup, a Korean fashion import firm. Despite winning awards and selling out trunk shows, the business didn’t have much potential for growth, she said.

“There were issues with the business model. It would have been okay as a small cult business,” she said. “While those businesses are great, that's just not what I wanted. I really wanted to go all in with a business where I could really scale it.”

Yoon said that this experience and her time at Harvard Business School gave her the confidence to start Peach & Lily. Being in business school during the financial crisis opened the door to several networking opportunities and allowed her to have open conversations with other founders about their journeys, about what works and what doesn’t, and some of the challenges they had to overcome.

“I think the existential moments lead to more fuel, passion and action,” she said. “It does get hard because there are just going to be moments where you have to wear like 17 different hats.”

Because entrepreneurs play so many different roles in their business, Yoon thinks it can be difficult for them to see the impact that their company can have on its customers. Sometimes, this can make it hard to stay motivated.

Yoon recharges by calling her support group: friends and family she calls her “personal cheerleaders.” When she has doubts about her work, she says, they remind her of her goals and why she started her business.

Customer reviews also help her stay motivated. Peach & Lily has an email listserv that allows customers to send reviews and comments to the company. Yoon feels the impact of her work when she reads reviews describing how her products have changed customers’ skin or how amazing they feel after using Peach & Lily products.

A little more than ten years after its founding, Peach & Lily is on track to become the number five skincare brand at Ulta Beauty.

“I would literally turn on amped up music and I would get so emotional being like, wow, we're actually helping people,” Yoon said. “This is why I'm doing this.”

dot.LA Reporter Decerry Donato contributed to this post.

This podcast is produced by Behind Her Empire. The views and opinions expressed in the show are those of the speakers and do not necessarily reflect those of dot.LA or its newsroom.

Hear more of the Behind Her Empire podcast. Subscribe on Stitcher, Apple Podcasts, Spotify, iHeartRadio or wherever you get your podcasts.



Decerry Donato

Decerry Donato is a reporter at dot.LA. Prior to that, she was an editorial fellow at the company. Decerry received her bachelor's degree in literary journalism from the University of California, Irvine. She continues to write stories to inform the community about issues and events in the L.A. area. On the weekends, she can be found hiking in the Angeles National Forest or sifting through racks at your local thrift store.
