After Dozens of Wrongful Arrests, a New Bill Is Cracking Down on Facial Recognition Tech for Law Enforcement

In 2020, Robert Julian-Borchak Williams was wrongfully arrested after an algorithm used by the Michigan State Police matched his driver's license photo to a blurry surveillance image. A few weeks later, Michael Oliver was arrested and charged with a felony by the Detroit Police Department after facial recognition technology (FRT) wrongfully identified him.

In response, Congressman Ted W. Lieu of Los Angeles County and other House Democrats introduced the Facial Recognition Act of 2022 last week, which would place limitations and prohibitions on law enforcement use of FRT.

The bill comes as records obtained by the Los Angeles Times show that the Los Angeles Police Department (LAPD) has used FRT at least 29,817 times since 2009. According to a study by the National Institute of Standards and Technology (NIST), many facial recognition algorithms falsely identified African-American and Asian faces 10 to 100 times more often than white faces.

“The United States desperately lacks a national privacy law or any sort of meaningful restrictions on how our faces and biometrics that we leave behind through our daily interactions can be used by private companies or by law enforcement,” says Courtney Radsch, a fellow at UCLA's Institute for Technology Law and Policy.

The new bill would require law enforcement to obtain a judge-authorized warrant before using facial recognition in an investigation. To obtain a warrant, an officer must submit a written affidavit to a judge. The idea is that adding this extra step will dissuade the LAPD and other agencies from relying so heavily on FRT and prevent the arrests of misidentified, innocent people.

Reliance on FRT peaked in the immediate aftermath of the January 6th Capitol riot. The New York Times reported that Clearview AI, a leading facial recognition firm, saw a 26% jump in usage by law enforcement agencies on January 7th. In August 2021, the Government Accountability Office reported that 20 of the 42 federal agencies it surveyed used FRT as part of their law enforcement efforts.

“Given that you're going to see ongoing protests [due to Roe v. Wade and other ongoing issues],” says Radsch, “I think the ability to pick out protesters in a crowd combined with data from social media profiling and other sort of biometric and public monitoring is really disturbing.”

If the bill passes, law enforcement agencies would also be prohibited from using FRT at protests and from using the technology on footage from body cameras, dashboard cameras and aircraft cameras.

At the federal level, no laws currently prevent the abuse of FRT. Jake Laperruque, deputy director of security and surveillance at the Center for Democracy and Technology, calls it a “wild west.”

“A lot of folks see on TV and imagine it's kind of like a sci-fi attack, but it's very much a part of modern policing and government surveillance, and unfortunately, one that right now, has very little safeguards around,” he adds.

So far, no state requires a warrant to use facial recognition. But more than a dozen states and cities have rules and guidelines that limit how law enforcement can use the technology.

San Francisco and Oakland have banned government agencies from using facial recognition due to bias concerns. In 2020, Oregon was the first state to prohibit the use of facial recognition. And Massachusetts requires a court order for scans, but rather than probable cause, the government only needs to show that identifying the individual is relevant to an investigation.

Laperruque, who has worked on the legislative side of the issue for more than a decade, says that isn't enough. Rep. Ted Lieu agrees. He told the LA Times that while more than a dozen states have enacted regulations around the use of FRT, the piecemeal approach doesn't protect all citizens from misidentification. “This bill creates baseline protections for all Americans while still enabling state and local jurisdictions to move forward with bans and moratoriums,” he added.

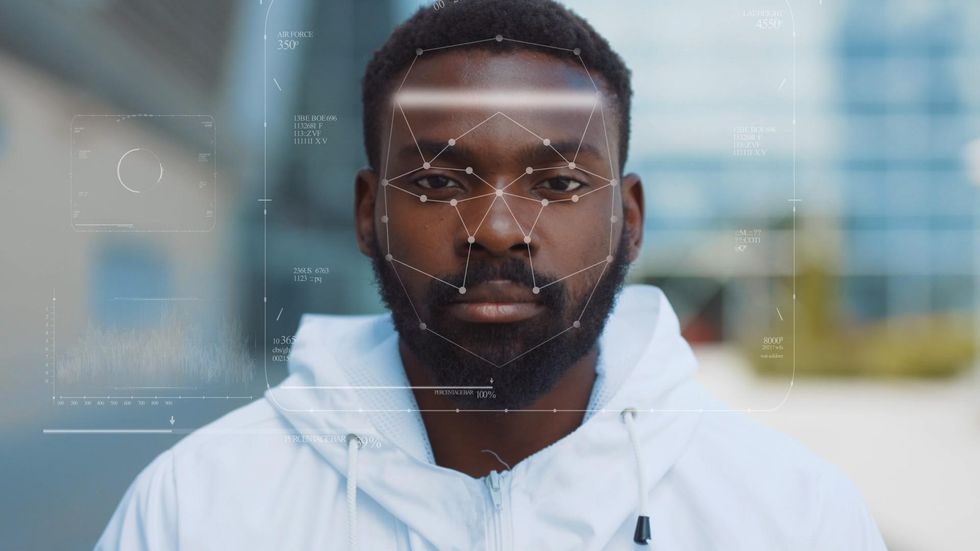

The technology employed by the LAPD ignores pigmentation, according to an officer who oversees it, instead digitally mapping the face by looking at things like the distance between the eyes, or the distance from the nose to mouth. (Shutterstock)

The technology employed by the LAPD ignores pigmentation, according to an officer who oversees it, instead digitally mapping the face by looking at things like the distance between the eyes, or the distance from the nose to mouth.Shutterstock