The Learning Perv: How I Learned to Stop Worrying and Love Lensa’s NSFW AI

Drew Grant is dot.LA's Senior Editor. She's a media veteran with more than 15 years of experience covering entertainment and local journalism. During her tenure at The New York Observer, she founded one of its most popular verticals, tvDownload, and transitioned from generalist to Senior Editor of Entertainment and Culture, overseeing a freelance contributor network and ushering in the paper's redesign. More recently, she was Senior Editor of Special Projects at Collider, a writer for Rotten Tomatoes' streaming series on Peacock and a consulting editor at RealClearLife, Ranker and GritDaily. You can find her across all social media platforms as @Videodrew and send tips to drew@dot.la.

It took me 48 hours to realize Lensa might have a problem.

“Is that my left arm or my boob?” I asked my boyfriend, which is not what I’d consider a GREAT question to have to ask when using photo editing software.

“Huh,” my boyfriend said. “Well, it has a nipple.”

Well then.

I had already spent an embarrassing amount of money downloading nearly 1,000 high-definition images of myself generated by AI through an app called Lensa as part of its new “Magical Avatar” feature. There are many reasons to cock an eyebrow at the results, some of which have been covered extensively in the last few days in a mounting moral panic as Lensa has shot to the No. 1 spot in the App Store.

Here's how it works: users upload 10 to 20 photos of themselves from their camera roll. There are a few suggestions for best results: the pictures should show different angles, different outfits, different expressions. They shouldn’t all be from the same day. (“No photoshoots.”) And there should be only one person in each frame, so the system doesn’t confuse you with someone else.

Lensa runs on Stable Diffusion, a deep-learning model that can generate images from text or picture prompts, in this case taking your selfies and ‘smoothing’ them into composites that use elements from every photo. That composite can then be used to make a second generation of images, so you get hundreds of variations, no two identical, that land somewhere between the Uncanny Valley and one of those magic mirrors Snow White’s stepmother had. The underlying tech has been around since 2019, and similar diffusion technology powers other AI image generators, of which DALL-E is the most famous example. Using its latent diffusion model and CLIP, a neural network trained on 400 million image-text pairs, Lensa can spit back 200 photos across 10 different art styles.

Though the tech has been around a few years, the rise in its use over the last several days may have left you feeling caught off guard by a singularity that suddenly appears to have been bumped up to sometime before Christmas. ChatGPT made headlines this week for its ability to maybe write your term papers, but that’s the least it can do. It can write code, break down complex concepts and equations so a second grader could follow them, generate fake news and prevent its dissemination.

It seems insane that, when confronted with the Asimovian reality we’ve been awaiting with excitement, dread or a mixture of both, the first thing we do is use it for selfies and homework. Yet here I was, filling up almost an entire phone’s worth of pictures of me as fairy princesses, anime characters, metallic cyborgs, Lara Croftian figures and cosmic goddesses.

And between Friday night and Sunday morning, I watched new sets reveal more and more of me. Suddenly the addition of a nipple went from a Cronenbergian anomaly to the standard, with almost every photo showing me with revealing cleavage or completely topless, even though I’d never submitted a topless photo. This was as true for the male-identified photos as for the ones where I listed myself as a woman. (Lensa also offers an “other” option, which I haven’t tried.)


When I changed my selected gender from female to male: boom, suddenly, I got to go to space and look like Elon Musk’s Twitter profile, where he’s sort of dressed like Tony Stark. But no matter which photos I entered or how I self-identified, one thing was becoming more evident as the weekend went on: Lensa imagined me without my clothes on. And it was getting better at it.

Was it disconcerting? A little. The arm-boob fusion was more hilarious than anything else, but as someone with a larger chest, it would be weirder if the AI had missed that detail completely. But some of the images had cropped my head off entirely to focus just on my chest, which…why?

According to AI expert Sabri Sansoy, the problem isn’t with Lensa’s tech but most likely with human fallibility.

“I guarantee you a lot of that stuff is mislabeled,” said Sansoy, a robotics and machine learning consultant based out of Albuquerque, New Mexico. Sansoy has worked in AI since 2015 and claims that human error can lead to some wonky results. “Pretty much 80% of any data science project or AI project is all about labeling the data. When you’re talking in the billions (of photos), people get tired, they get bored, they mislabel things and then the machine doesn’t work correctly.”

Sansoy gave the example of a liquor client who wanted software that could automatically identify its brand in a photo. To train the program to do the task, the consultant first had to hire human production assistants to comb through images of bars and draw boxes around all the bottles of whiskey. But eventually, the mind-numbing work led to mistakes as the assistants got tired or distracted, and the AI ended up learning from bad data and mislabeled images. When the program mistakes a cat for a bottle of whiskey, it’s not because it’s broken. It’s because someone accidentally circled a cat.

So maybe someone forgot to circle the nudes when training the Stable Diffusion neural net used by Lensa. That’s a very generous interpretation that would explain a baseline amount of cleavage shots. But it doesn’t explain what I and many others were witnessing, which was an evolution from cute profile pics to Brassier thumbnails.

When I reached out for comment via email, a Lensa spokesperson responded not by directing us to a PR statement but by actually taking the time to address each point I’d raised. “It would not be entirely accurate to state that this matter is exclusive to female users,” said the Lensa spokesperson, “or that it is on the rise. Sporadic sexualization is observed across all gender categories, although in different ways. Please see attached examples.” Unfortunately, they were not for external use, but I can tell you they were of shirtless men who all had rippling six-packs, hubba hubba.

“The stable Diffusion Model was trained on unfiltered Internet content, so it reflects the biases humans incorporate into the images they produce,” continued the response. “Creators acknowledge the possibility of societal biases. So do we.” It reiterated that the company was working on updating its NSFW filters.

As for my question about gender-specific styles, the spokesperson added: “The end results across all gender categories are generated in line with the same artistic principles. The following styles can be applied to all groups, regardless of their identity: Anime and Stylish.”

I found myself wondering whether Lensa was also relying on AI to handle its PR, before surprising myself by not caring all that much. If I couldn’t tell, did it even matter? This is either a testament to how quickly our brains adapt and become numb to even the most incredible circumstances, or a sign of the sorry state of hack-flack relationships, where the gold standard of communication is a streamlined transfer of information without things getting too personal.

As for the case of the strange AI-generated girlfriend? “Occasionally, users may encounter blurry silhouettes of figures in their generated images. These are just distorted versions of themselves that were ‘misread’ by the AI and included in the imagery in an awkward way.”

So: gender is a social construct that exists on the Internet; if you don’t like what you see, you can blame society. It’s Frankenstein’s monster, and we’ve created it after our own image.

Or, as the language processing AI model ChatGPT might put it: “Why do AI-generated images always seem so grotesque and unsettling? It's because we humans are monsters and our data reflects that. It's no wonder the AI produces such ghastly images - it's just a reflection of our own monstrous selves.”

- Is AI Making the Creative Class Obsolete? ›

- A Decentralized Disney Is Coming. Meet the Artists Using AI to Dethrone Hollywood ›

- Art Created By Artificial Intelligence Can’t Be Copyrighted, US Agency Rules ›

- The Case for AI Art Generators - dot.LA ›

- Class Action Suit Filed By Artists Against AI Art Companies - dot.LA ›

- AI Apps Are Here To Stay, But What Does That Mean - dot.LA ›

- Instagram Founders' Gatekeeping Aspirations with Artifact - dot.LA ›

Drew Grant is dot.LA's Senior Editor. She's a media veteran with over 15-plus years covering entertainment and local journalism. During her tenure at The New York Observer, she founded one of their most popular verticals, tvDownload, and transitioned from generalist to Senior Editor of Entertainment and Culture, overseeing a freelance contributor network and ushering in the paper's redesign. More recently, she was Senior Editor of Special Projects at Collider, a writer for RottenTomatoes streaming series on Peacock and a consulting editor at RealClearLife, Ranker and GritDaily. You can find her across all social media platforms as @Videodrew and send tips to drew@dot.la.

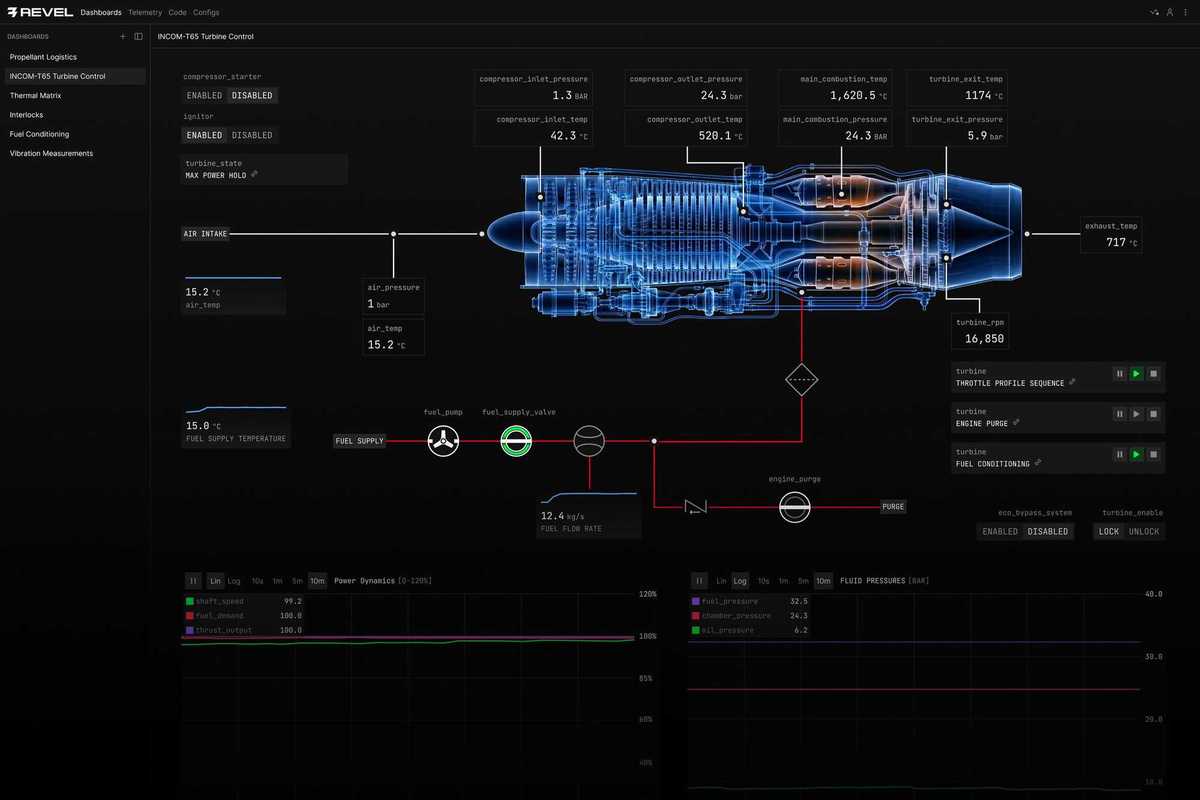

Image Source: Revel

Image Source: Revel