From Hype to Backlash: Is Public Opinion on AI Shifting?

Online interest in new AI companies, concepts and pitches remains as frothy as ever. A widely shared thread over the weekend by Silicon Valley computer scientist Dr. Patrik Desai instructs readers to begin recording their elders, as he predicts (with 100% certainty!) that we’ll be able to map and preserve human consciousness “by the end of the year.” The tweets have drawn over 10 million views in just three days. A new piece from the MIT Technology Review touts the ways AI apps will aid historians in understanding societies of the past. Just the other day, a Kuwait-based media company introduced an entirely virtual news anchor named “Fedha.”

But in the aftermath of a much-discussed open letter in which some industry insiders suggested a pause on AI development, and as the Biden Administration considers potential new regulations around AI research, it appears that we’ve entered something of a backlash moment. Every new, excited thread extolling the futuristic wonders of AI image generators and chatbots is now accompanied by a dire warning about the potentially dangerous consequences of the technology, or at least an acknowledgment that it’s not yet delivering on its full promise.

The Government Responds to Recent Developments in AI

To be clear, any actual movement by the federal government on AI regulation remains a good way off. President Biden’s Commerce Department this week put out a formal request for comments on potential new accountability measures, specifically around the question of whether new AI models should require certification before being released to the public. Commerce Dept. official Alan Davidson told the Wall Street Journal that the government’s chief concern was putting “guardrails in place to make sure [AI tools] are being used responsibly.” The comment-fielding period will last for 60 days, after which the agency will publish its advice to lawmakers about how to approach AI. Then and only then will lawmakers begin debating specific policies or approaches.

Biden’s Justice Department is also reportedly monitoring competition in the AI sector, while the Federal Trade Commission has cautioned tech companies about making “false or unsubstantiated claims” about their AI products. Democratic Colorado Sen. Michael Bennet told WSJ that his main concerns centered around children’s safety, specifically mentioning reports about chatbots giving troubling or dangerous advice to users posing as children. When President Biden was asked by a reporter at the White House last week whether or not he thinks AI is dangerous, he responded, “It remains to be seen. It could be.”

Chinese regulators are also mulling over new rules around AI development this week, following the release of chatbots and apps by large tech firms Baidu and Alibaba. China’s ruling party has already embraced AI for its own purposes, of course, using the technology for oversight and surveillance. The Atlantic reports that Chinese president Xi Jinping aims to use AI applications to create “an all-seeing digital system of social control.”

But beyond long-percolating actions at the highest levels of power, there’s also been a wider-scale, subtle but still noticeable shift in sentiment around some of these recent AI developments.

Mainstreaming of AI Raises More Employment Concerns

In some ways, these are the same old concerns that have been discussed in tech circles for years, now going mainstream. A recent editorial in USA Today, for example, picks up on the concerns about potential misuse of generative AI images to influence elections or steer public opinion, arguing that it’s only a matter of time before it becomes impossible to distinguish between AI-generated images and the real thing.

A report in today’s Washington Post centers on the sudden appearance of “fake pornography” generated by AI apps like Midjourney and Stable Diffusion, including a fictional woman named “Claudia” who is selling “nude photos” via direct message to users on Reddit. Impressive though the technology itself may be, the Post piece highlights a number of potential downsides. Though some users no doubt realize what they’re purchasing, others are being fooled into believing that Claudia is an actual human selling real photographs. Additionally, similar techniques could, of course, be employed to make artificial pornographic images that resemble real women, in a cutting-edge new form of sexual harassment.

Then, of course, there’s the potential competition for real workers in the adult industry, who could (at least theoretically) be put out of work by AI models or directors. OnlyFans model Zoey Sterling told the Post that she’s not concerned about being replaced by AI yet, but some digital rivals have already started appearing on the scene.

In another viral story about AI taking human jobs away, Rest of World reports that AI-produced artwork is already impacting the Chinese gaming industry. One freelance illustrator told the publication that nearly all of her gaming work has dried up, and that she’s now more frequently employed to tweak or clean up AI-generated imagery than to create original artwork herself, at a tenth of her previous pay rate. A Chinese game studio told the site that five of its 15 character-design illustrators have been laid off so far this year.

Over in Vox, reporter Sigal Samuel worries that – over a long enough timeline – chatbots like ChatGPT could more generally homogenize our world and flatten out human creativity. Already, a significant amount of online text is now composed by chatbots. As future chatbots are trained using published content from the internet, this means that – in the near future – robots will learn how to write from other robots. Could this mean the permanent end of original thought, as we continually rewrite, rearrange, and recompile ideas that were already published in the past?

Geopolitical Concerns Around AI Continue to Grow

Only if humanity survives for long enough! An item this week from Foreign Policy notes that AI could completely alter geopolitics and warfare, and features a number of chilling predictions about the use of dystopian tech like automated drones, AI-driven software that helps leaders make strategic and tactical decisions, and even AI upgrades that make existing weapons systems more potent and powerful. A February report by the Arms Control Association warns that AI could potentially expand the capability of existing weapons like hypersonic missiles to the point of “blurring the distinction between a conventional and nuclear attack.”

Finally, in an acknowledgment of the potential AI consequence that’s always on everyone’s mind, we recently witnessed the debut of ChaosGPT, an experimental open-source attempt to encourage OpenAI’s ChatGPT-4 to lay out a plan for global domination. After removing the OpenAI guardrails that prohibit these specific lines of inquiry, the ChaosGPT team worked with ChatGPT-4 on an extensive plan for humanity’s destruction, which involved both generating support for its plans on social media and acquiring nuclear weapons. Though ChaosGPT had a number of interesting ideas, such as coordinating with other GPT systems to further its goal, the program ultimately didn’t manage to devise a workable plan to take over the planet and kill all the humans. Oh well, next time.


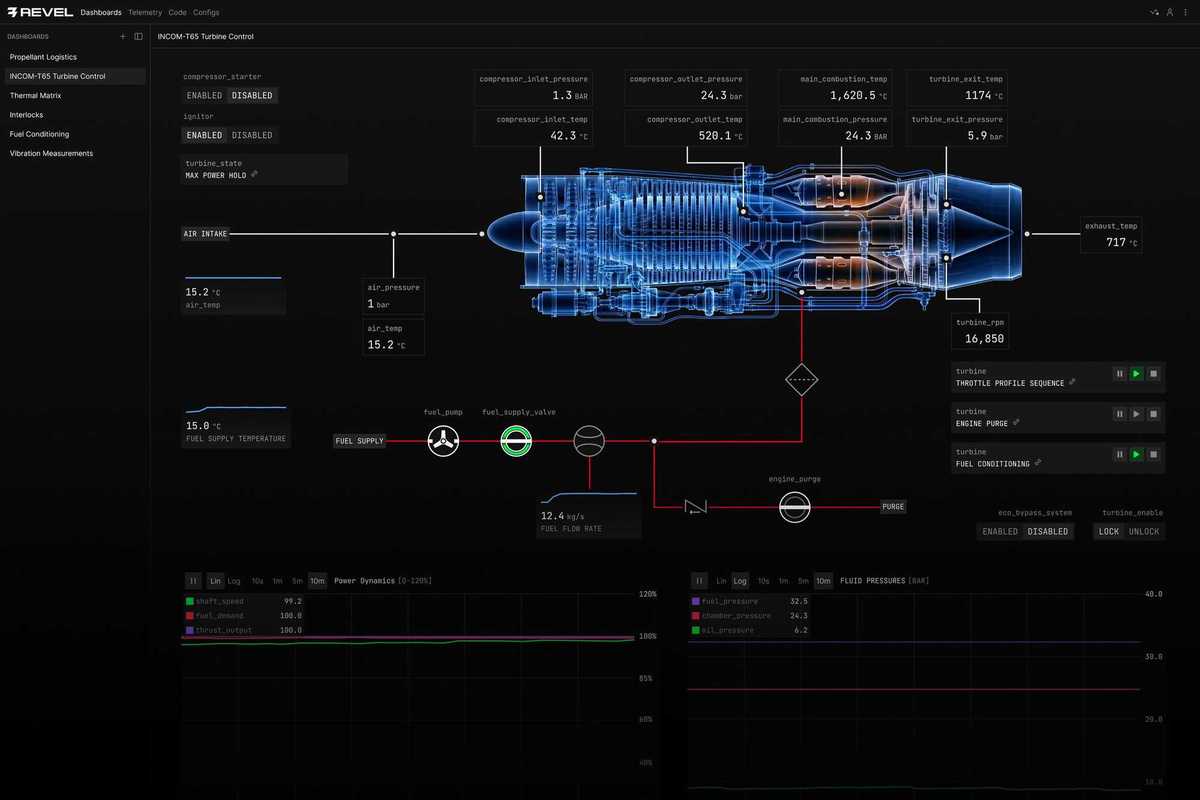

Image Source: Revel

Image Source: Revel