'Open Letter' Proposing 6-Month AI Moratorium Continues to Muddy the Waters Around the Technology

This is the web version of dot.LA’s daily newsletter.

AI continues to dominate the news – not just in the tech press but, at this point, in mainstream outlets as well – and the stories have entered a by-now familiar cycle. A wave of exciting new developments, releases and viral apps is followed by a flood of alarm bells and concerned op-eds, wondering out loud whether things are moving too fast for humanity’s own good.

With OpenAI and Microsoft’s GPT-4 arriving a few weeks ago to massive enthusiasm, we were overdue for our next hit of jaded cynicism, warning about the potentially dire impact of intuitive chatbots and text-to-image generators.

Sure enough, this week, more than 1,000 signatories released an open letter calling for all AI labs to pause training any new systems more powerful than GPT-4 for six months.

What does the letter say?

The letter calls out a number of concerns that will be familiar to anyone who has been reading up on AI development this past year. On the most immediate and practical level, it cautions that chatbots and automated text generators could potentially eliminate vast swathes of jobs previously filled by humans, while “flood[ing] our information channels with propaganda and untruth.” The letter then continues into full apocalypse mode, warning that “nonhuman minds” could eventually render us obsolete and dominate us, risking “loss of control of our civilization.”

The six-month break, the signatories argue, could be used to jointly develop shared safety protocols around AI design to ensure that AI systems remain “safe beyond a reasonable doubt.” They also suggest that AI developers work in collaboration with policymakers and politicians to develop new laws and regulations around AI and AI research.

The letter was signed by several AI developers and experts, along with tech industry royalty like Elon Musk and Steve Wozniak. TechCrunch does point out that no one from inside OpenAI seems to have signed it, nor has anyone from Anthropic, a group of former OpenAI developers who left to design their own “safer” chatbots. OpenAI CEO Sam Altman did speak to the Wall Street Journal this week in reference to the letter, noting that the company has not yet started work on GPT-5 and that time for safety tests has always been built into its development process. He referred to the letter’s overall message as “preaching to the choir.”

Critics of the letter

The call for a pause was not without critics, though. Journalist and investor Ben Parr noted that the vague language makes it functionally meaningless, without any kind of metrics to gauge how “powerful” an AI system has become or suggestions for how to enforce a global AI pause. He also noted that some signatories, including Musk, are OpenAI and ChatGPT competitors, potentially giving them a personal stake in this fight beyond just concern for the future of civilization. Others, like NBC News reporter Ben Collins, suggested that the dire AI warnings could be a form of dystopian marketing.

On Twitter, entrepreneur Chris Pirillo noted that “the genie is already out of the bottle” in terms of AI development, while physicist and author David Deutsch called out the letter for confusing today’s AI apps with the Artificial General Intelligence (AGI) systems still only seen in sci-fi films and TV shows.

Legitimate red flags

Obviously, the letter speaks to relatively universal concerns. It’s easy to imagine why writers would be concerned by, say, BuzzFeed now using AI to write entire articles and not just quizzes. (The website isn’t even using professional writers to collaborate with and copy-edit the software anymore. The new humans helping out “Buzzy the Robot” to compose its articles are non-editorial employees from the client partnership, account management, and product management teams. Hey, it’s just an “experiment,” freelancers!)

But the letter does once more raise some red flags about the potentially misleading ways that some in the industry and the media are discussing AI, which continue to make these kinds of high-level discussions around the technology more cumbersome and challenging.

A recent viral Twitter thread credited GPT-4 with saving a dog’s life, leading to a lot of breathlessly excited coverage about how computers were already smarter than your neighborhood veterinarian. The owner entered the dog’s symptoms into the chatbot, along with copies of its blood work, and ChatGPT responded with the most common potential ailments. As it turns out, a live human doctor tested the animal for one of the bot’s suggested illnesses, and the test confirmed the diagnosis. So the computer is, in a very real sense, a hero.

Still, considering what might be wrong with dogs based on their symptoms isn’t what ChatGPT does best. It’s not a medical or veterinary diagnostic tool, and it doesn’t have a database of dog ailments and treatments at the ready. It’s designed for conversations, and it’s just guessing as to what might be wrong with the animal based on the texts on which it was trained, sentences and phrases that it has seen connected in human writing in the past. In this case, the app guessed correctly, and that’s certainly good news for one special pupper. But there’s no guarantee it would get the right answer every time, or even most of the time. We’ve seen a lot of evidence that ChatGPT is perfectly willing to lie, and can’t actually tell the difference between truth and a lie.

There’s also already a perfectly solid technology that this person could have used to enter a dog’s symptoms and research potential diagnoses and treatments: Google search. A search results page also isn’t guaranteed to come up with the correct answer, but it’s as reliable as GPT-4, if not more so, in this particular use case, at least for now. A quality post on a reliable veterinary website would hopefully contain similar information to the version ChatGPT pulled together, except it would have been vetted and verified by an actual human expert.

Have we seen too many sci-fi movies?

A response published in Time by AI researcher Eliezer Yudkowsky – long considered a thought leader in the development of artificial general intelligence – argues that the open letter doesn’t go far enough. Yudkowsky suggests that we’re currently on a path toward “building a superhumanly smart AI,” which will very likely result in the death of every human being on the planet.

No, really, that’s what he says! The editorial takes some very dramatic turns that feel pulled directly from the realms of science-fiction and fantasy. At one point, he warns: “A sufficiently intelligent AI won’t stay confined to computers for long. In today’s world you can email DNA strings to laboratories that will produce proteins on demand, allowing an AI initially confined to the internet to build artificial life forms or bootstrap straight to postbiological molecular manufacturing.” This is the actual plot of the 1995 B-movie “Virtuosity,” in which an AI serial killer app (played by Russell Crowe!) designed to help train police officers grows his own biomechanical body and wreaks havoc on the physical world. Thank goodness Denzel Washington is around to stop him.

And, hey, just because AI-fueled nightmares have made their way into classic films, that doesn’t mean they can’t also happen in the real world. But it nonetheless feels like a bit of a leap to go from text-to-image generators and chatbots – no matter how impressive – to computer programs that can grow their own bodies in a lab, then use those bodies to take control of our military and government apparatus. Perhaps there’s a direct line between the experiments being done today and truly conscious, self-aware, thinking machines down the road. But, as Deutsch cautioned in his tweet, it’s important to remember that AI and AGI are not necessarily the exact same thing.

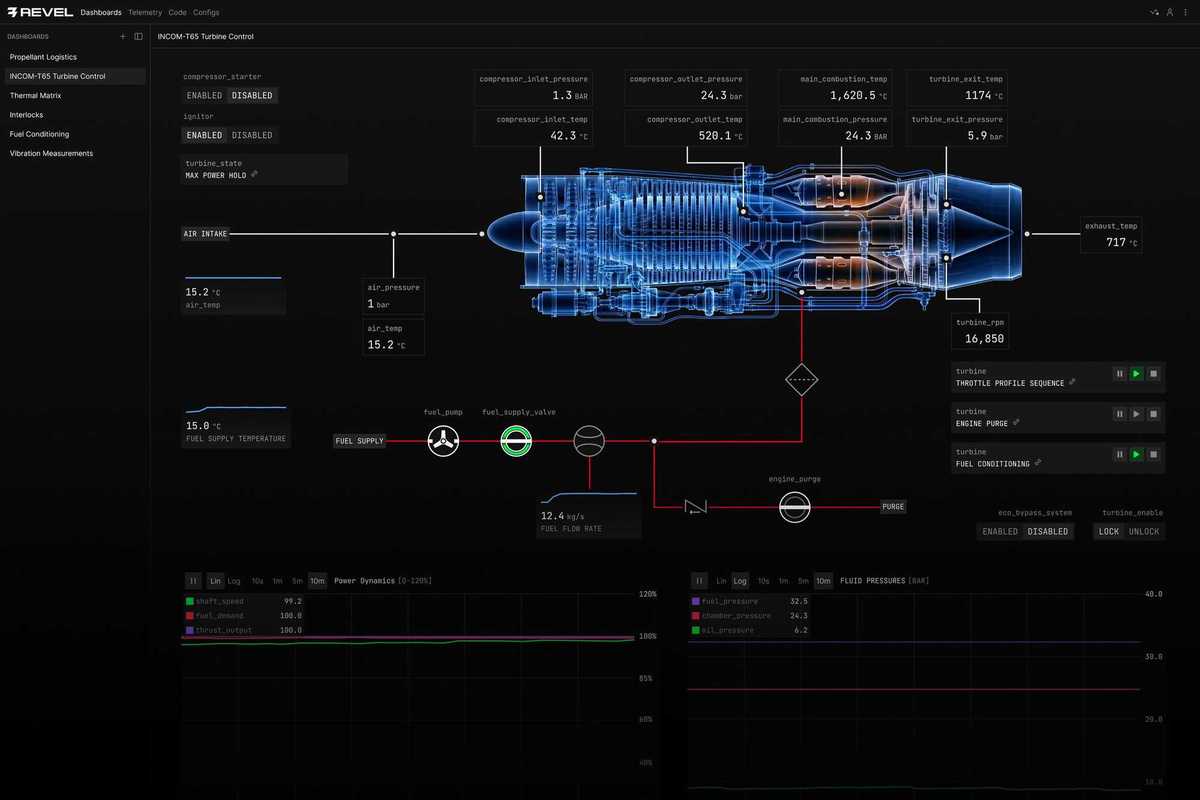

Image Source: Revel