How Social Media Moderation Might Be Legislated After the Capitol Attack

Sam primarily covers entertainment and media for dot.LA. Previously he was Marjorie Deane Fellow at The Economist, where he wrote for the business and finance sections of the print edition. He has also worked at the XPRIZE Foundation, U.S. Government Accountability Office, KCRW, and MLB Advanced Media (now Disney Streaming Services). He holds an MBA from UCLA Anderson, an MPP from UCLA Luskin and a BA in History from University of Michigan. Email him at samblake@dot.LA and find him on Twitter @hisamblake

Calls are mounting among lawmakers to ramp up regulation of social media following the violent takeover of the U.S. Capitol last week.

Facebook, Twitter and a slew of other tech companies have locked President Trump's accounts, while Amazon, Apple and Google have given the boot to right-wing site Parler.

But Bay Area Congresswoman Anna Eshoo, a Democrat on the Energy and Commerce Committee, calls the tech giants' moves too little, too late and says "Congress and the administration must take swift and bold action."

"These companies have demonstrated they will not do the right thing on their own," she said.

The problems that social media can create or exacerbate, including spreading misinformation and breeding terrorism, are well known. But it's tricky regulatory territory, cutting across issues of free speech, data privacy, market competition and the responsibility that companies should have over the content on their platforms.

Eshoo sat on the powerful Communications & Technology Subcommittee in the last congressional session, during which she and fellow Democratic Congressman Tom Malinowski of New Jersey introduced legislation to amend Section 230. The law governs the liability of internet intermediaries, including social media companies, for the content users publish on their platforms. The amendment would increase sites' liability when their algorithms spread harmful or radicalizing content that leads to offline violence.

Facebook and Twitter's move to suspend Trump's account, she said, was "a few hours too late to thwart the failed coup attempt...and years too late to avoid the harm done to our democracy."

Eshoo said in a statement that she will revive and update the bill in this legislative session.

The Possible Changes to Section 230

Enacted in 1996 as part of the Communications Decency Act, Section 230 says interactive computer services like social media websites are not to be considered publishers of, and therefore should not be held liable for, the content that appears on their platforms. It also provides cover for "good faith" moderation of content that the provider or users deem objectionable.

Both sides of the political aisle have increasingly taken issue with the legislation, for different reasons.

Democrats tend to criticize 230 for absolving tech companies of the responsibility for policing their platforms. President-elect Biden has called for 230 to be revoked, saying social media companies are "propagating falsehoods they know to be false."

That could backfire, said Ángel Díaz of the Brennan Center for Justice, a nonpartisan law and policy think tank.

"The reality is Section 230 is precisely the law that gives the platforms flexibility to remove posts that may not necessarily be illegal but are objectionable in some way," he said.

President Trump began pushing for a Section 230 repeal after Twitter started fact-checking his tweets. His concerns echoed objections other Republicans have made to the law: that it enables platforms to disproportionately censor conservative voices.

Such concerns speak to one of the thorny issues surrounding content moderation: balancing it with free speech. Although Section 230 aims to provide a legal framework for doing so, it does not specify what should and should not be censored, leaving room for debate of the sort that has unfolded across party lines.

Nor does Section 230 cover all the issues pertinent to potential social media regulation. Since the law was written, internet companies like Facebook, Amazon and Google have gobbled up huge markets, raising concerns among legislators and observers about fair competition. Concern is also growing about these companies' use of consumer data.

"The conversation needs to go beyond 230 to capture these other avenues that are really important for understanding the future of the internet," Díaz said.

Emma Llansó, director of the Center for Democracy & Technology's Free Expression Project, said "the attention Congress (and the Biden administration) will be paying will be as strong if not more intense in the wake of what happened at the Capitol."

Congress "needs to identify the specific problems and harms it's trying to address or prevent and come up with tailored legislative proposals," she said.

But, she added, amending 230 alone won't resolve many of these issues and could create bigger problems.

Requiring platforms to moderate content with a fine-tooth comb, for instance, could give more established and better-funded social media sites such as Facebook a leg up on upstarts and make it impossible for new companies to get started.

"If you do regulation like 230 the wrong way you could just entrench the biggest players," Llansó said.

Other Content Moderation Laws in Development

One helpful way forward, said Díaz, could be to mandate more transparency from social media companies about their moderation policies and the outcomes of that moderation. Clearer public data, for example, "would help us get a better understanding of how much of a conservative bias there is or how much hate speech is targeting communities of color and being allowed to stay on the platform," he said.

In California, State Assemblymember Ed Chau of Monterey Park introduced a bill in December to address transparency from social media companies. Assembly Bill 35 would force social media platforms to disclose to users, in an easily accessible way, whether they have a policy or mechanism in place to address the spread of misinformation.

"The rioting upon our nation's Capital...was exacerbated by the spread of falsehoods and misinformation, some of which was disseminated via social media platforms," Chau said in a statement released last week.

"It is vital to ensure that information on these platforms, which many have come to rely upon, is accurate and factual," he said.

The bill would authorize levying $1,000 fines on social media platforms for each day they violate the disclosure requirement. That provision, however, could disproportionately burden smaller companies, Llansó said.

"You could imagine a site that doesn't know about this requirement finding out 90 days after they launch that they owe $90,000 in fines, which would be problematic for a small service," Llansó said, noting that even a blog with a comments section could be subject to the bill in its current form.

Another piece of legislation that may resurface in the new congressional session would establish a bipartisan National Commission on Online Platforms and Homeland Security to explore how social media platforms can spread violence. The bill was introduced by Mississippi Congressman Bennie Thompson in 2019 and received co-sponsorship from nearly 20 legislators on both sides of the aisle.



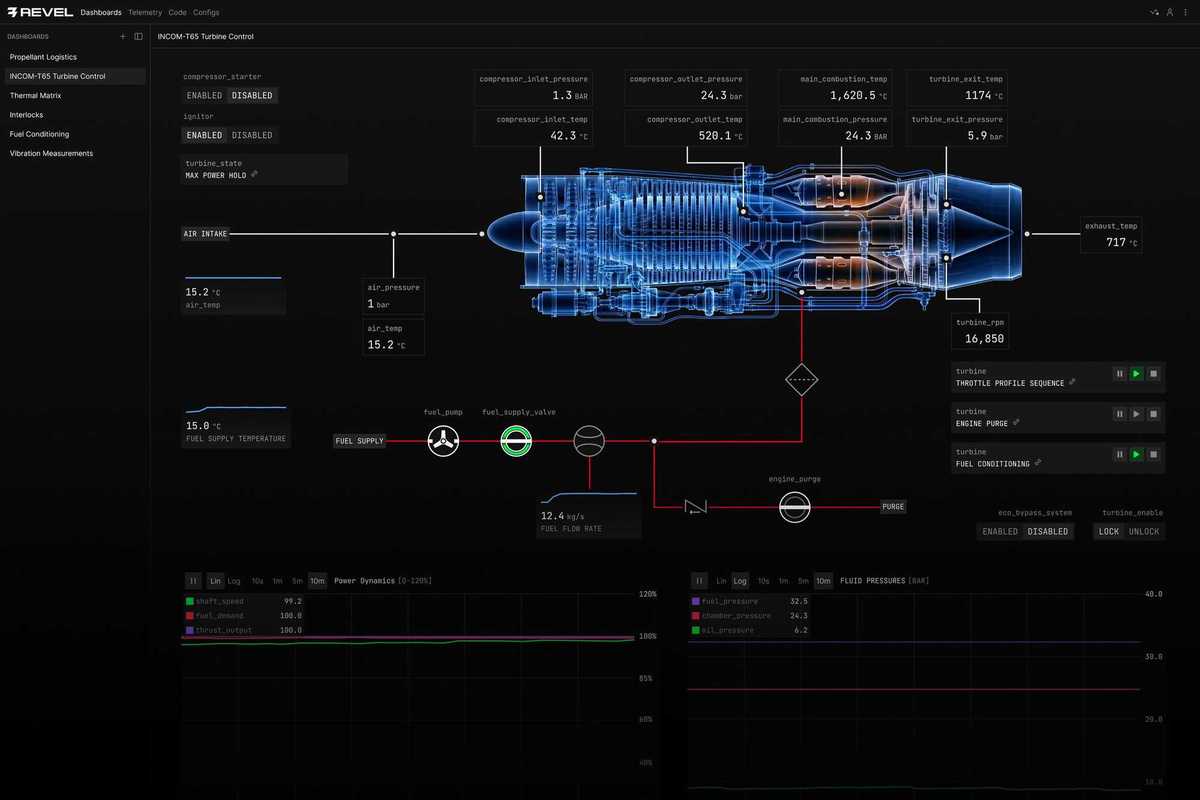

Image Source: Revel

Image Source: Revel