Watch: The Future of Content Moderation Online

Annie Burford is dot.LA's director of events. She's an event marketing pro with over ten years of experience producing innovative corporate events, activations and summits for tech startups to Fortune 500 companies. Annie has produced over 200 programs in Los Angeles, San Francisco and New York City, working most recently for a China-based investment bank heading the CEC Capital Tech & Media Summit, formerly the Siemer Summit.

As Big Tech cracks down on moderation after the Capitol attack and Wall Street braces for more fallout from social media's newfound influence on stock trading, legislators are eyeing changes to Section 230 of the Communications Decency Act of 1996. On Wednesday, February 10, dot.LA brought together legal perspectives and the views of a founder and venture capitalist on the ramifications of changing the way that social media and other internet companies deal with the content posted on their platforms.

A critic of Big Tech moderation, Craft Ventures General Partner and former COO of PayPal David Sacks called for an amendment of the law during dot.LA's Strategy Session Wednesday. Tyler Newby and Andrew Klungness, both partners at law firm Fenwick, laid out the potential legal implications of changing the law.

Section 230 limits the liability of internet intermediaries, including social media companies, for the content users publish on their platforms.

"Mend it, don't end it," Sacks said.

Sacks said he's concerned about censorship in the wake of companies tightening moderation policies. Pointing to Robinhood's recent decision to temporarily block users from trading certain stocks, including GameStop's, he said we're now seeing discussions about Big Tech's role in censorship unfold in nonpartisan settings.

"Who has the power to make these decisions?" he said. "What concerns me today is that Big Tech has all the power."

Social media sites including Twitter pulled down former President Trump's account after last month's attack on the U.S. Capitol. But critics have said that these sites didn't go far enough in stopping conversations that provoked the violence.

To provide some external standard, Sacks called for the "reestablishment of some First Amendment rights in this new digital public square," which is to say, on privately owned platforms.

Newby pointed to a series of recent bills aimed at reining in the power of tech companies. Changes to moderation laws could have sweeping impacts on more companies than giants like Facebook or Twitter.

"It's going to have a huge stifling effect on innovation," said Klungness, referring to a possible drop in venture capital to new startups. "Some business models may be just simply too risky or may be impractical because they require real-time moderation of content."

And if companies are liable for how their users behave, Klungness said, some may never take the risk of launching these companies at all.

Watch the full discussion below.

Strategy Session: The Future of Content Moderation Online

David Sacks, Co-Founder and General Partner of Craft Ventures

David Sacks is co-founder and general partner at Craft. He has been a successful tech entrepreneur and investor for two decades, building and investing in some of the most iconic companies of the last 20 years. David has invested in over 20 unicorns, including Affirm, Airbnb, Bird, Eventbrite, Facebook, Houzz, Lyft, Opendoor, Palantir, Postmates, Reddit, Slack, SpaceX, Twitter and Uber.

In December 2014, Sacks made a major investment in Zenefits and became the company's COO. A year later, in the midst of a regulatory crisis, the board asked David to step in as interim CEO of Zenefits. During his one-year tenure, David negotiated resolutions with insurance regulators across the country and revamped Zenefits' product line. By the time he left, regulators had praised David for "righting the ship," and PC Magazine hailed the new product as the best small business HR system.

David is well known in Silicon Valley for his product acumen. AngelList's Naval Ravikant has called David "the world's best product strategist." David likes to begin any meeting with a new startup by seeing a product demo.

Kelly O'Grady, Chief Correspondent & Host and Head of Video at dot.LA

Kelly O'Grady is dot.LA's chief host & correspondent. Kelly serves as dot.LA's on-air talent, and is responsible for designing and executing all video efforts. A former management consultant for McKinsey, and TV reporter for NESN, she also served on Disney's Corporate Strategy team, focusing on M&A and the company's direct-to-consumer streaming efforts. Kelly holds a bachelor's degree from Harvard College and an MBA from Harvard Business School. A Boston native, Kelly spent a year as Miss Massachusetts USA, and can be found supporting her beloved Patriots every Sunday come football season.

Tyler Newby, Partner at Fenwick

Tyler focuses his practice on privacy and data security litigation, counseling and investigations, as well as intellectual property and commercial disputes affecting high technology and consumer-facing companies. Tyler has an active practice in defending companies in consumer class actions, state attorney general investigations and federal regulatory agency investigations arising out of privacy and data security incidents. In addition to his litigation practice, Tyler regularly advises companies large and small on reducing their litigation risk on privacy, data security and secondary liability issues. Tyler frequently counsels companies on compliance issues relating to key federal laws such as the Children's Online Privacy Protection Act (COPPA), the Fair Credit Reporting Act (FCRA), the Computer Fraud and Abuse Act (CFAA), the Gramm-Leach-Bliley Act (GLBA), the Electronic Communications Privacy Act (ECPA) and the Telephone Consumer Protection Act (TCPA).

In 2014, Tyler was named among the top privacy attorneys in the United States under the age of 40 by Law360. He currently serves as a Chair of the American Bar Association Litigation Section's Privacy & Data Security Committee, and was recently appointed to the ABA's Cybersecurity Legal Task Force. Tyler is a member of the International Association of Privacy Professionals, and has received the CIPP/US certification.

Andrew Klungness, Partner at Fenwick

Leveraging nearly two decades of business and legal experience, Andrew navigates clients—at all stages of their lifecycles—through the opportunities and risks presented by novel and complex transactions and business models.

Andrew is a co-chair of Fenwick's consumer technologies and retail and digital media and entertainment industry teams, as well as a principal member of its fintech group. He works with clients in a number of verticals, including ecommerce, consumer tech, fintech, enterprise software, blockchain, marketplaces, CPG, mobile, AI, social media, games and edtech, among others.

Andrew leads significant and complex strategic alliances, joint ventures and other collaboration and partnering arrangements, which are often driven by a combination of technological innovation, industry disruption and rights to content, brands or celebrity personas. He also structures and negotiates a wide range of agreements and transactions, including licensing, technology sourcing, manufacturing and supply, channel partnerships and marketing agreements. Additionally, Andrew counsels clients in various intellectual property, technology and contract issues in financing, M&A and other corporate transactions.

Sam Adams, Co-Founder and CEO of dot.LA

Sam Adams serves as chief executive of dot.LA. A former financial journalist for Bloomberg and Reuters, Adams moved to the business side of media as a strategy consultant at Activate, helping legacy companies develop new digital strategies. Adams holds a bachelor's degree from Harvard College and an MBA from the University of Southern California. A Santa Monica native, he can most often be found at Bay Cities deli with a Godmother sub or at McCabe's with a 12-string guitar. His favorite colors are Dodger blue and Lakers gold.

Francesca Billington is a freelance reporter. Prior to that, she was a general assignment reporter for dot.LA and has also reported for KCRW, the Santa Monica Daily Press and local publications in New Jersey. She graduated from Princeton in 2019 with a degree in anthropology.

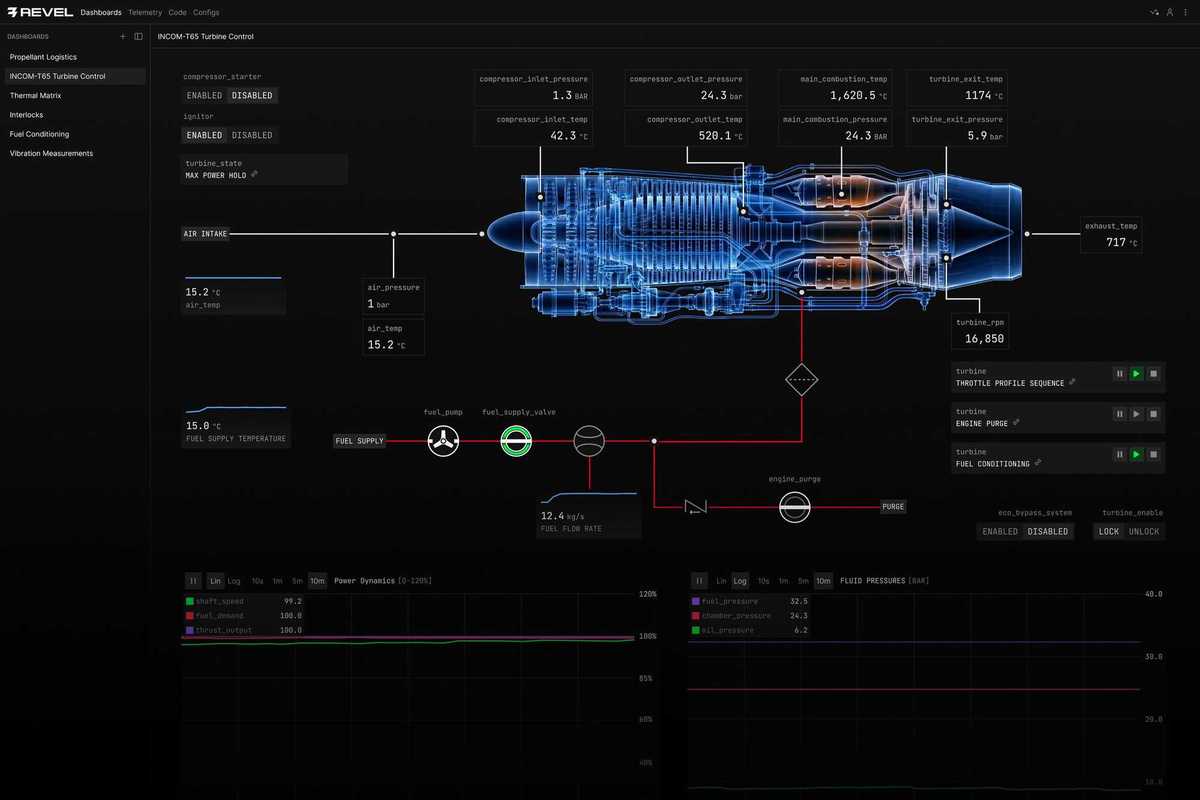

Image Source: Revel

Image Source: Revel