Ferret Wants To Be a ‘Knight in Shining Armor’ for Investors. Will Ethical Concerns Stand in Its Way?

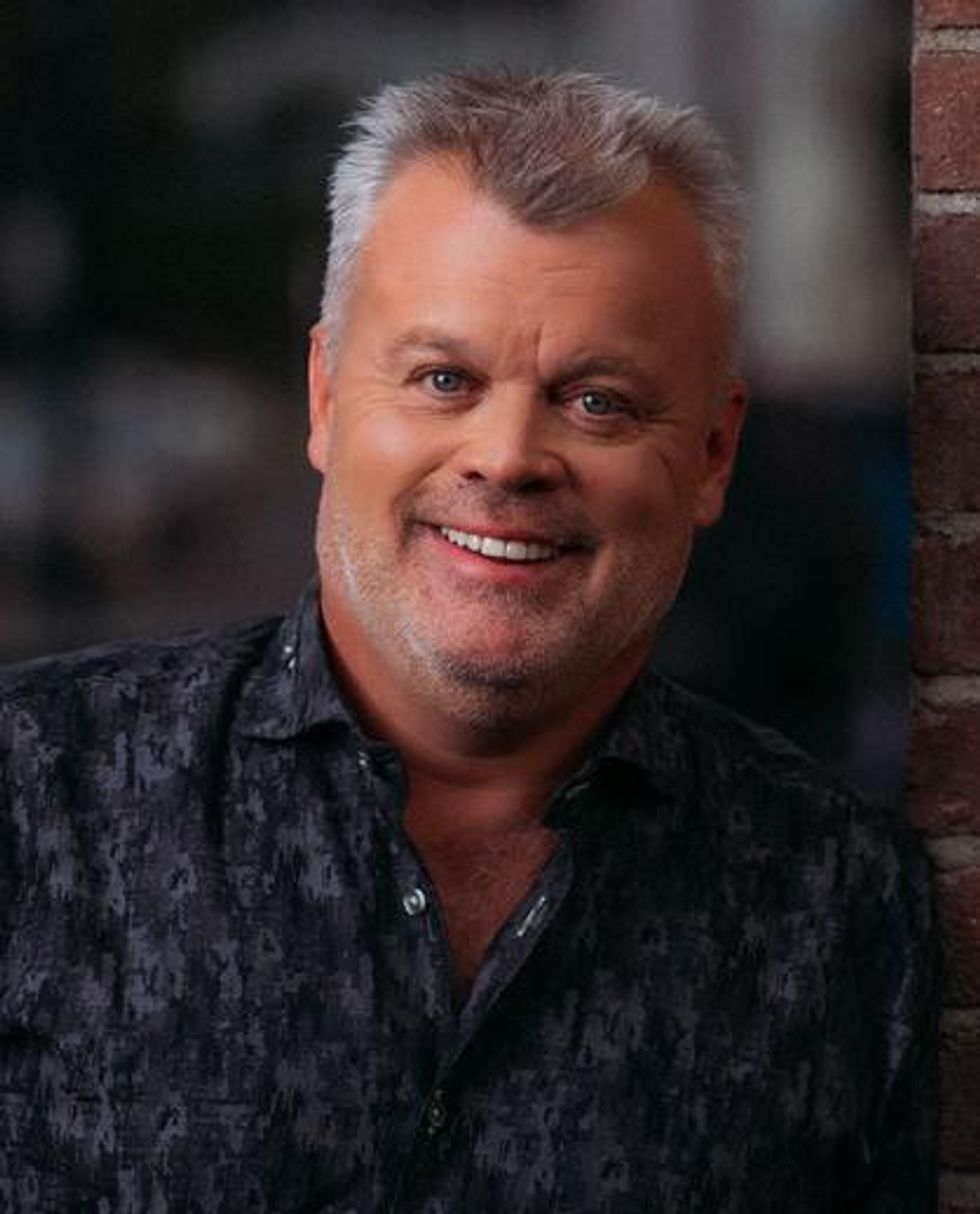

The way tech veteran and entrepreneur Rob Loughan explains it, he isn't in it for the money. Loughan, best known for founding Octane Software, which sold for $3.2 billion in 2001, wants to change how investors assess their personal and professional networks, despite critics' concerns.

"I want to be seen as kind of like the knight in shining armor, on the white horse, decreasing the amount of bad stuff happening in the world," said Loughan.

The 56-year-old uses an analogy to explain the benefits of his AI-enabled risk analysis tool: an open house. Several people, maybe dozens, are walking through a homeowner's house, where all of that person's valuable belongings are kept. What if the owner has unknowingly let a thief inside?

Ferret, he said, can spot them. The Calabasas-based company uses AI to help users identify risks within their networks or even neighborhoods. Its software scans a person's contacts and makes those individuals' backgrounds, including criminal records, available to users. It's geared toward investors and high-net-worth individuals who often go in on high-stakes deals.

Ferret co-founder Rob Loughan

"The next person can pull up [to your home] in a Maserati and have a Gucci suit. They could have been bankrupt three times, and they could even be a bad person that has a bunch of fraud behind them," said Loughan. "And then there's someone like me. Looks disheveled, probably hasn't shaved in three days, my T-shirt has holes in it. [...] I might get overlooked because of the way that I presented myself."

The company was started in 2020 by Loughan and his co-founder, Al Macdonald. Macdonald is the founder and CEO of NominoData, a technology company that has been providing the financial industry with risk management data for the past 12 years.

Loughan said he started Ferret "by accident" after he invested in NominoData and Macdonald asked him for help selling the company.

"I said, 'Don't sell it'," said Loughan. "'We're gonna democratize that data, and we're going to give it to everybody instead of just the ivory tower banks and financial institution governments who seem to know everything about us, but we don't know anything about each other'."

What emerged was a technology that can put NominoData into anybody's hands. The app, which is currently in alpha testing, uses AI to determine which people are within the user's network -- be it coworkers, friends, neighbors -- and provides easy access to publicly available information on them from resources like court records and news archives. Users can also search for specific people outside of their network.

The data shown on each individual excludes misdemeanor offenses such as DUIs or marijuana possession charges, focusing instead on serious cases that are relevant to investors.

"What matters to [investors] are lawsuits, government licenses, past exit successes, fraud allegations and white collar crime," said Matt Heisie, Ferret's head of product. "Search engines and background checks are bombarded with sensational arrest records or negative news, while serious white collar crime typically stays in the shadows. Ferret changes that dynamic."

Ferret acts somewhat like a search engine to make it easier to obtain information that is publicly available but difficult to find.

Ferret can, and in Loughan's eyes likely will, be used for personal matters outside of the investment world. In fact, Ferret is currently in contact with five dating sites, looking to make its data available to users. Certain information the app collects, such as battery charges, could be important to an online dater trying to feel out a potential mate.

Privacy Experts Weigh In

Ferret announced a $4 million seed round last month, with the Australian investment firm Artesian and more than 30 angel investors participating. Despite the interest, Loughan admits every potential investor he has talked to expressed concern over the app's legality. The app also raises moral questions about whether a company should be able to potentially trap someone in their past failures, even when that person may have atoned for them.

From the beginning, Loughan said, Ferret has been careful to make sure its product is legal, going so far as to work with a global law firm that the company declined to name.

"We're unlikely to lose a lawsuit because we're so fastidious about doing it properly," said Loughan, who added that unlike Facebook, which has come under fire for its collection of users' personal information, Ferret has no nefarious intentions and nothing to gain from misusing data.

John Davisson, senior counsel at the Electronic Privacy Information Center (EPIC), a nonprofit research organization in Washington, DC, that focuses on privacy rights, pointed to two laws in particular that he said Ferret will inevitably have to comply with to operate legally: the Fair Credit Reporting Act (FCRA) and the California Consumer Privacy Act.

The laws limit what credit reporting agencies and businesses can do with the information they collect on people. Ferret maintains that the FCRA does not apply to it because it is not a credit or consumer reporting agency.

The laws also prohibit certain uses of the information the app collects, such as employing it to make hiring decisions. Ferret said that the data it provides is not the same as that of a traditional background check.

The startup said it requires users to agree to terms of use that are specifically designed to make them aware of what they can and cannot do with the app.

"It's not just like, 'Do you agree to these terms and conditions?' and there's some infinitely long page that nobody reads," said Heisie. "They have to affirmatively agree to those individual points before they get into the application. It's very clear, too, that violation of the terms and conditions will just result in suspension of their use of the application for them immediately."

Jay Stanley, a senior policy analyst with the American Civil Liberties Union's "Speech, Privacy and Technology" program, said that in the past, "practical obscurity" of personal information -- the concept that public information is not always easily accessible -- has indirectly protected privacy, but much of that has gone away in the digital age.

"While you undoubtedly have a First Amendment right to talk about what people have done in the past and what the records are about people, by systematizing it you're also making it harder for people to escape their past and start over," said Stanley.

'Not Trying to Point Fingers'

Ferret said it makes every effort to maintain privacy and fairness for those whose backgrounds are collected in its app.

Details of its artificial intelligence system are secret, but the company said it is planning to publish a white paper that lays out its AI framework and gives a statement of ethics for all to scrutinize.

What makes Ferret different, Heisie said, is that it's not a catch-all record scraper. Instead, it targets information relevant to the businesspeople who use it.

"It starts with what goes in," said Heisie. "It starts with trying to identify what actually is relevant from a business context and deprioritizing what's not, and using that as the beginning of the algorithms."

But the algorithms that make up artificial intelligence can be tainted by their developers' biases, which influence their output. EPIC's Davisson said he is not convinced that AI is yet at the point where it can perform in an unbiased way.

"These tools frequently develop and encode gender biases, racial biases, ethnic biases," said Davisson. "And especially something that's trying to make reputational judgments based on news coverage, which is a spectrum of sources that is obviously susceptible to human bias. Those same biases can creep into what they are claiming is an unbiased system."

As an example of this at work, Davisson cited a recruitment AI system formerly used by Amazon that was shown a few years ago to be strongly biased against female candidates, filtering out resumes that included the word "women's" and listed certain female-only colleges.

Loughan is confident in his team's ability to make Ferret a service that is lawful and free of bias, but he is also prepared for pushback, possibly even lawsuits.

"I want to be seen as someone who's trying to make the world a better place, not trying to point fingers at people and say they're bad, because we don't do that," Loughan said. "We just show the data that's publicly available, and then you come to your own conclusion about the person."


Image Source: Revel
