🔦 Spotlight

Hello Los Angeles, happy Friday and happy Valentine’s Day weekend.

While the rest of us are debating flowers vs. gifts vs. reservations, LA’s infrastructure nerds are out here celebrating a different kind of romance: finding leaks before they ghost your entire operation.

Zeitview just made methane a first-class feature

Zeitview has acquired Insight M, folding high-frequency aerial methane detection into its broader “see it, measure it, fix it” play for critical infrastructure. The combined offering pairs methane monitoring with Zeitview’s predictive asset-health and inspection workflows, so operators can spot emissions faster, prioritize repairs, and tie results back to ROI instead of vibes.

What Zeitview actually does, beyond the buzzwords

If you haven’t been tracking them, Zeitview is essentially the operating layer for inspecting big, physical assets using drones, aircraft, and computer vision. They can analyze imagery you already have or capture fresh data, then turn it into inspection reports and analytics through their Asset Insights platform.

Zeitview was previously known as DroneBase and rebranded after raising an expansion round, signaling a broader push beyond “drones” into enterprise-grade infrastructure intelligence across energy and other asset-heavy industries.

Why Insight M fits, and why this isn’t just “climate tech”

Methane is the rare climate problem that also hits the P&L, because a leak is both emissions and lost product. Insight M has built credibility around methane monitoring that’s meant to be operational, not just observational, and that plugs neatly into Zeitview’s inspection footprint.

Put together, this looks less like a single acquisition and more like a workflow upgrade: one system that finds a problem, quantifies it, routes it to the right team, and proves it was fixed. The least romantic Valentine’s message of all, maybe, but also the most adult: “I noticed something small, and I handled it before it became expensive.”

Keep scrolling for the latest LA venture rounds, fund news and acquisitions.

🤝 Venture Deals

LA Companies

- HAWKs (Hiking Adventures With Kids), a nature-based children’s enrichment brand founded in Los Angeles, secured a strategic investment from Post Investment Group to accelerate its nationwide franchise expansion. The company plans to scale its mobile, outdoor-program model (after-school adventures, camps, and weekend sessions) by opening franchise territories across the U.S. while using Post’s franchising platform to build the operational infrastructure and support system for new operators. - learn more

LA Venture Funds

- Allomer Capital Group participated in TRUCE Software’s newly closed Series B, a round led by Yttrium with additional backing from New Amsterdam Growth Capital. The company did not disclose the amount, but says it will use the funding to scale go-to-market for two mobile-first product suites: an AI video telematics platform for commercial fleets that runs on standard smartphones, and TRUCE Family, a software approach to limiting student phone distractions in K–12 schools. - learn more

- Wonder Ventures participated in The Biological Computing Company’s $25M seed round, which was led by Primary Venture Partners alongside Builders VC, Refactor Capital, E1 Ventures, Proximity, and Tusk Ventures. The startup is commercializing “biological compute,” connecting living neurons to modern AI systems to make certain tasks dramatically more energy-efficient, and says its first product shows a 23x retained improvement in video model efficiency while also helping discover new AI architectures. - learn more

- Bonfire Ventures co-led Santé’s $7.6M seed round, with backing from Operator Collective, Y Combinator, and Veridical Ventures. Santé is building an AI- and fintech-driven operating system for wine and liquor retailers that brings POS, inventory, e-commerce, delivery orders, and invoice workflows into one platform to replace a lot of manual, fragmented processes. - learn more

- B Capital co-led Apptronik’s initial 2025 Series A and participated again in the company’s new $520M Series A extension, bringing the total Series A to $935M+ (nearly $1B raised overall). The company says it will use the fresh capital to ramp production and deployments of its Apollo humanoid robots and invest in facilities for robot training and data collection, with the extension also bringing in new backers like AT&T Ventures, John Deere, and Qatar Investment Authority alongside repeat investors including Google and Mercedes-Benz. - learn more

- WndrCo participated in Inertia Enterprises’s new $450M Series A, a round led by Bessemer Venture Partners with additional investors including GV, Modern Capital, and Threshold Ventures. The company says it will use the milestone-based financing to commercialize laser-based fusion built on physics proven at the National Ignition Facility at Lawrence Livermore National Laboratory, including building its “Thunderwall” high-power laser system and scaling a production line to mass-manufacture fusion fuel targets. - learn more

- Riot Ventures participated as a returning investor in Integrate’s $17M Series A, which was led by FPV Ventures with participation from Fuse VC and Rsquared VC. Integrate is pitching an ultra-secure project management platform built for classified, multi-organization programs, and says it has become a requirement for certain U.S. Space Force launch efforts. The company plans to use the new funding to ship additional capabilities for government customers and scale go-to-market across the defense tech sector. - learn more

- MANTIS Ventures participated in Project Omega’s $12M oversubscribed seed round, which was led by Starship Ventures alongside Buckley Ventures, Decisive Point, Slow Ventures, and others. Project Omega is emerging from stealth to build an end-to-end nuclear fuel recycling capability in the U.S., aiming to turn spent nuclear fuel into long-duration power sources and critical materials, with early lab demonstrations underway and an ARPA-E partnership to validate a commercially viable recycling pathway. - learn more

- Plus Capital participated in Garner Health’s $118M round, which was led by Khosla Ventures with additional backing from Founders Fund and existing investors including Maverick Ventures and Thrive Capital, valuing the company at $1.35B. Garner says it helps employers steer members to high-quality doctors using its “Smart Match” provider recommendations and a reimbursement-style incentive called “Garner Rewards,” and it will use the funding to expand its offerings, grow its care team, and scale partnerships with payers and health systems. - learn more

- Emerging Ventures co-led Taiv’s $13M Series A+ alongside IDC Ventures, with continued support from investors including Y Combinator and Garage Capital. Taiv says it will use the funding to scale its “Business TV” platform, which uses AI to detect and swap TV commercials in venues like bars and restaurants with more relevant ads and on-screen content, as it expands across major North American markets. - learn more

LA Exits

- Mattel163 is being acquired by Mattel, which is buying out NetEase’s remaining 50% stake and valuing the mobile games studio at $318M. The deal gives Mattel full ownership and control of the team behind its IP-driven mobile titles, strengthening its in-house publishing and user acquisition capabilities as it expands its digital games business. - learn more

- DJ Mex Corp. is set to be acquired in part by Marwynn Holdings, which signed a non-binding letter of intent to purchase a 51% stake in the U.S.-based e-waste sourcing and logistics company. The deal would bring DJ Mex into Marwynn’s EcoLoopX platform to expand its asset-light “reverse supply chain” services for recyclable materials, though it’s still subject to due diligence and final agreements. - learn more

Download the dot.LA App

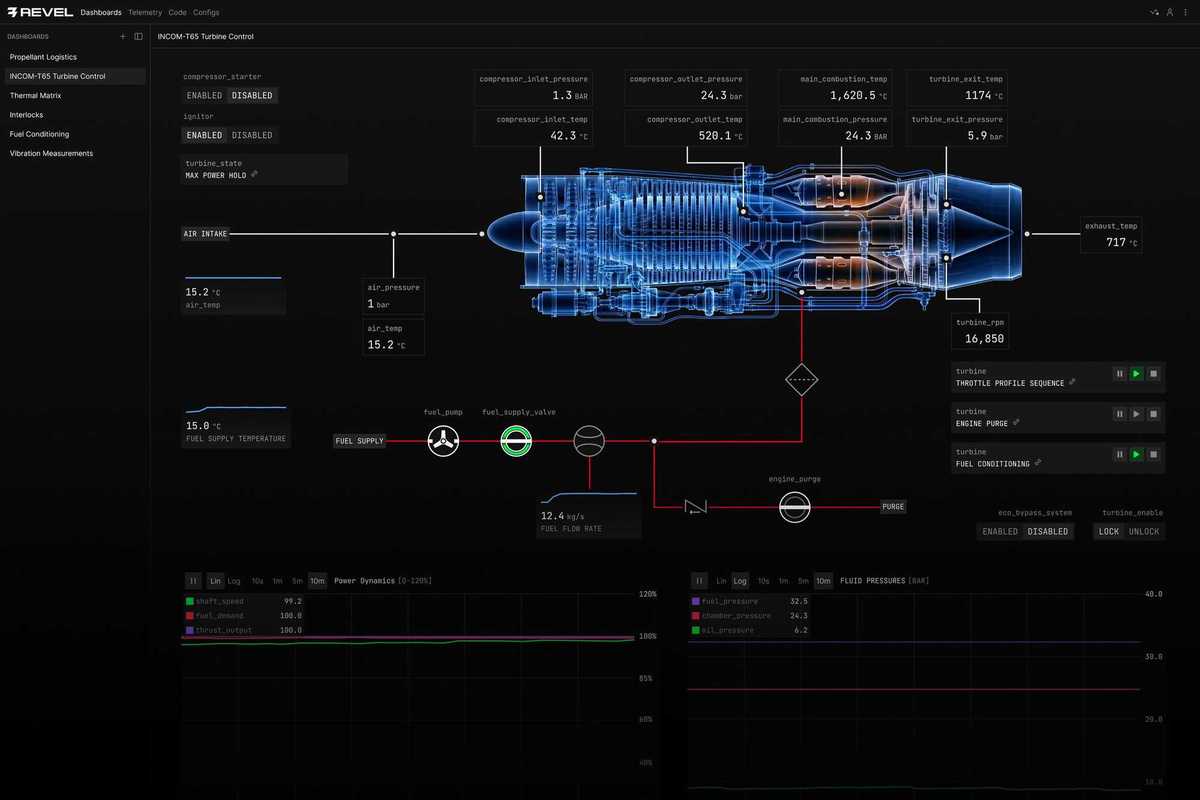

Image Source: Revel
