Discord Sued Over Child Sexual Exploitation

Kristin Snyder is dot.LA's 2022/23 Editorial Fellow. She previously interned with Tiger Oak Media and led the arts section for UCLA's Daily Bruin.

The Social Media Victims Law Center in California, a legal resource aiding parents whose children have been harmed by social media, is suing Discord, Roblox, Snap and Meta for allegedly enabling sexual exploitation.

The group's lawsuit documents a 13-year-old girl's interactions with adult men on Roblox who encouraged her to converse on Discord, Instagram and Snapchat. The girl was encouraged to send explicit photos, drink alcohol and share money, which the lawsuit alleges led to suicide attempts.

Meta and Snap are no strangers to lawsuits of this kind. In the last year alone, Santa Monica-based Snapchat has faced multiple lawsuits regarding sexual exploitation. The most recent suit claims the company did not protect a teenage girl from sexual predators using the app.

In response, Snapchat has since tightened its parental controls. Meta, which is also facing lawsuits regarding children’s mental health and child exploitation, has introduced similar parent supervision tools across Instagram and its VR headsets. Experts have said that such controls, though a step in the right direction, still require diligence from parents instead of the platforms.

But for instant messaging platform Discord and online gaming platform Roblox, lawsuits accusing the companies of harming children mark a new chapter, despite both platforms' reputation for hosting a number of young users.

In the past, Roblox has been accused of failing to protect its young users from sexual predators. The platform has also struggled to monitor sexual content in its games, with some users building sexual game spaces where people can congregate. But up until now, neither issue has led to any formal legal proceedings.

Discord has also dealt with its fair share of scrutiny for not protecting children from adults sharing the same platform. Launched in 2015, the messaging platform gained a $15 billion valuation by fostering communities; gamers, in particular, have built up niche servers. Channel moderators are typically responsible for upholding community guidelines, though Discord does have a team that monitors user reports.

In recent years, however, its growing user base has concerned parents, who often find the platform difficult to use and thus struggle to monitor their children's accounts. As Discord has grown more popular, many parents have spoken out about their children being groomed or sent sexually explicit messages through the platform.

Discord did not comment on the pending litigation, but a spokesperson told dot.LA that the company is proactively investigating and banning users and servers that violate its terms of service and community guidelines. Additionally, the spokesperson says that the company saw a 29% increase in reports to the National Center for Missing & Exploited Children in Q1 of 2022 compared to Q4 of 2021.

“Discord has a zero-tolerance policy for anyone who endangers or sexualizes children,” the Discord spokesperson says. “We work relentlessly to keep this activity off our service and take immediate action when we become aware of it.”

Still, the platform’s problems extend beyond accusations of creating an environment for adults to exploit children. In 2017, White supremacist groups formed coalitions through Discord and used the app to recruit new members. The platform was also used in planning the 2017 Unite the Right rally in Charlottesville. New York State Attorney General Letitia James is investigating Discord over how the Buffalo mass shooter used the app. According to court filings, the shooter had asked Discord users to review his plans in advance of the event.

In response to these events, in 2021, Discord removed 2,000 extremist communities from its platform, a mere fraction of the 30,000 total communities Discord banned from the app that year. The largest violations continue to stem from exploitative content, which includes revenge porn and sexually explicit content involving minors. In addition to removing communities, the platform has also partnered with internet safety nonprofit ConnectSafely to create a guide for parents monitoring their children's activity on the platform.

As more lawsuits target how social media potentially harms children, it’s at least clear that these companies will face further pressure to change the way they monitor their platforms, a move that many of the companies have been reluctant to make in the past.



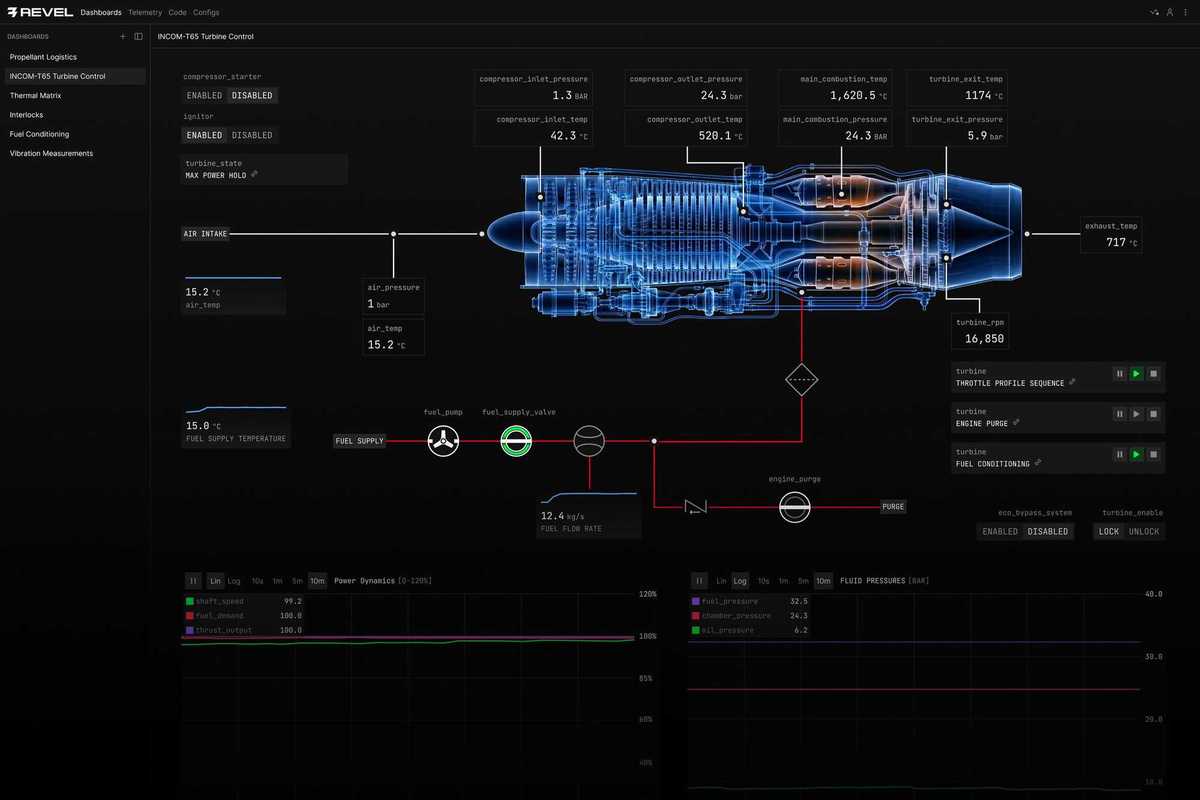

Image Source: Revel

Image Source: Revel