AI’s Marketing Push Gets An Assist from Congress

OpenAI CEO Sam Altman testified before a Senate Judiciary subcommittee this week about the promise and the potential drawbacks of AI, addressing lawmakers’ concerns about whether the technology needs regulation and what exactly that regulation might look like.

Senators voiced some common fears about AI. To demonstrate how AI tools can already be used to create and spread misinformation, Democratic Sen. Richard Blumenthal of Connecticut opened the hearing with an AI-generated recording of his own voice. Democratic Sen. Gary Peters of Michigan also weighed in, noting that AI “is a work in progress” and that “regulations can be incredibly important, but they have to be smart.” Republican Sen. Marsha Blackburn of Tennessee – the heart of the country music industry – asked specifically about AI tools that draw on the previously published work of artists, singers, and songwriters. (Altman responded by pledging to work with artists on issues around rights and compensation.)

In fact, everyone present seemed to largely agree, at least in broad terms, that AI deserves a closer look. Altman, for his part, stressed the importance of regulation in determining how these powerful AI tools will be developed and deployed going forward.

In his opening remarks, Altman referred to AI as a “printing press moment” that could alter the course of world history, while suggesting that “regulatory intervention by governments will be critical to mitigate the risks of increasingly powerful models.” It may seem counterintuitive for the CEO of a major AI company to welcome government regulation and intervention. (Illinois Democratic Sen. Dick Durbin called a company coming to Congress to ask for regulation “historic.”) Still, there are several possible explanations for Altman’s point of view.

Most obviously (and least cynically), he may simply agree that this technology has many potential downsides. In recent interviews, Altman has said he agrees with other tech leaders – such as fellow OpenAI co-founder Elon Musk – that “moving with caution and an increasing rigor for safety issues is really important.” In March, he told CNBC that he’s “a little bit scared” of AI. On Monday night, at a dinner with around 60 lawmakers in Washington, Altman reportedly expanded on this thought, telling them “my worst fear is we cause significant harm to the world.” Perhaps, as an expert in AI technology, he is simply voicing genuine concerns.

It’s also possible that Altman doesn’t believe the US government could do much to slow AI development at this point, so there’s no reason not to be friendly and ingratiating. For one thing, this is complex, cutting-edge technology that most lawmakers don’t fully understand. With new and more powerful tools being developed every day, all over the world, governments may ultimately find it easier to regulate specific AI applications and tools than the underlying development of the technology itself. (The European Union is considering regulations that would apply to certain uses of AI, such as facial recognition, and would also ask companies to conduct their own internal risk assessments.) Many experts argue that real, effective regulation would require a dedicated government agency staffed with AI experts, which would take considerable time to authorize, organize, and establish.

Altman has also been careful and deliberate in how he discusses AI publicly, from a marketing and public relations perspective. Insisting that AI is developing so quickly that political leaders must step in to save the world reinforces the idea that this is groundbreaking software already reshaping the world around us. It’s a sales pitch as much as a warning, and both drive home the same central theme: this is world-shifting technology that everyone needs to learn about, use, and get on board with today, or risk being left behind.

Of course, these are also tropes the press is only too happy to pick up and run with. “Will robots take over your job and/or the world?” makes for a compelling, clickable headline, and with public interest in AI already running high, it’s an easy way to drive traffic. It’s unsurprising that the most viral tech phenomenon since “Pokémon Go” is getting so much press, particularly when the focus is on doomsday scenarios.

Plus, by asking Congress to intervene and help regulate AI, Altman presents himself as a responsible steward of the technology with the public’s best interests at heart. When he proposed a new government agency to set rules for developing AI systems, Republican Sen. John Kennedy of Louisiana suggested that Altman himself might be the right person to run it. In some ways, that narrative is already taking hold.

To take an even more cynical view, OpenAI and its products – including DALL-E, the ChatGPT chatbot, and the GPT-4 model – are already considered industry leaders. At this point, hitting the brakes on new AI development could help developers that have already locked in a “first mover” advantage. Slowing everyone down is a much bigger risk for a company trailing at the back of the pack than for one already in first place.

Whatever the strategy behind Altman’s approach, it was undeniably effective at generating positive buzz and press from the hearing. While congressional tech hearings are frequently antagonistic – as we’ve recently seen with appearances by leaders from Google, Meta, and TikTok – Altman emerged from his session with senators “unscathed,” enjoying what Axios called a “honeymoon phase” with lawmakers.

Whether the company can maintain such a warm relationship with Washington going forward – particularly if tools like ChatGPT and DALL-E really do start influencing elections or costing millions of Americans their livelihoods – remains to be seen.

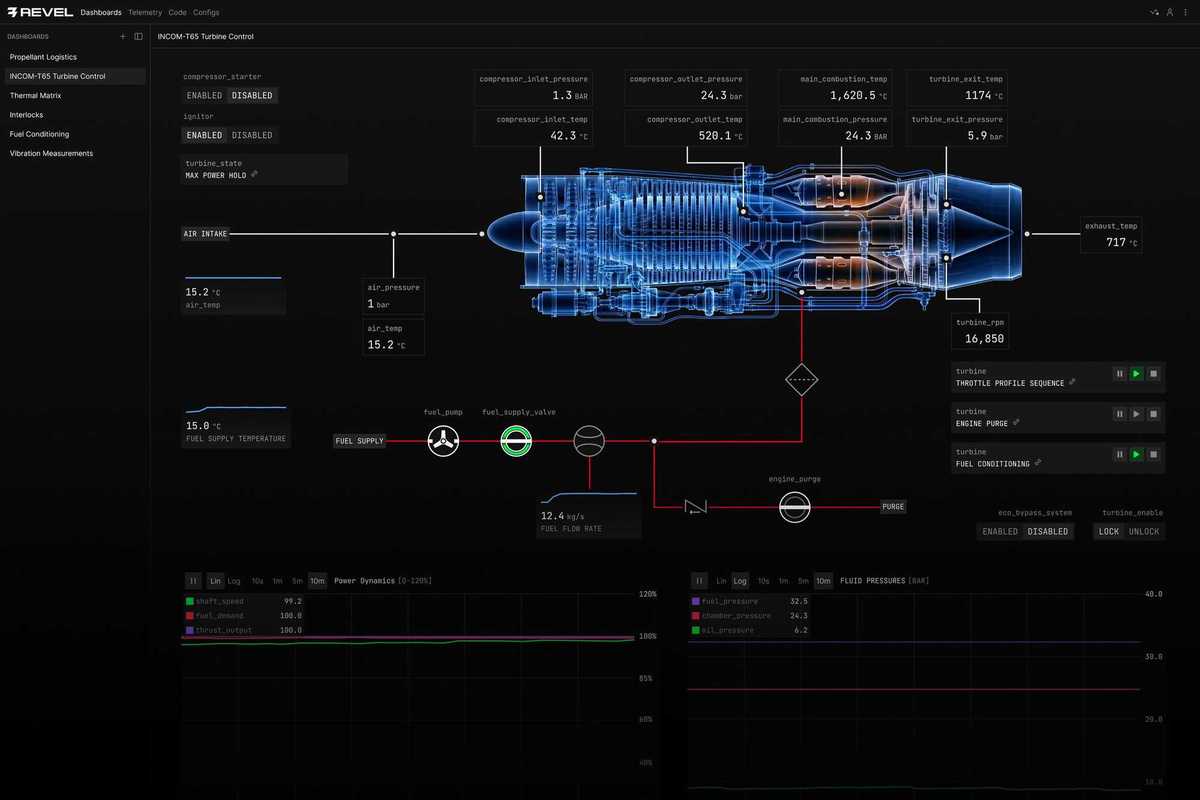

Image Source: Revel