How Two Upcoming SCOTUS Rulings Could Reshape the Internet

This is the web version of dot.LA’s daily newsletter. Sign up to get the latest news on Southern California’s tech, startup and venture capital scene.

Two cases before the Supreme Court this week will reconsider Section 230 of the Communications Decency Act and could have far-reaching consequences for how content is hosted and moderated online.

What is Section 230?

The Communications Decency Act of 1996 established a number of legal rules that continue to shape how companies and individuals can permissibly interact online to this day. Essentially, Section 230 says that a website, unlike a conventional publisher, can’t be held liable for anything potentially offensive or illegal that’s posted by its users or other third parties. In the statute’s words, the provider of an “interactive computer service” can’t be “treated as the publisher or speaker” of information provided by someone else.

Section 230 also includes what’s known as “Good Samaritan” protection for companies that own websites or internet platforms: as long as they act in good faith to remove or restrict access to obscene, violent, harassing or otherwise objectionable material, internet companies can’t be held civilly liable for doing so.

Though Section 230 is far-reaching in its application, it has always had a few key exceptions. It doesn’t shield websites from federal criminal law or from intellectual property claims such as copyright infringement, and a 2018 amendment removed protection for material that violates sex trafficking laws.

In the wake of several livestreamed mass shootings and growing concerns about the spread of election and medical misinformation online, politicians on both sides of the aisle called the law’s broad protections into question, and some suggested amending Section 230 or eliminating it altogether. During his 2020 presidential run, former Texas Representative Beto O’Rourke proposed amending the law to force internet companies to be more proactive in removing hate speech.

Nonetheless, these protections are widely credited with allowing the modern internet to flourish, enabling everything from social networks to search engines to host content, develop recommendation algorithms, and otherwise serve as a hub for public communication without constantly worrying about being sued into oblivion.

So what’s going on with these two court cases?

The cases in front of the Supreme Court this week will take a fresh look at Section 230 protections through an extreme scenario: content posted by the militant group Islamic State, or ISIS, which the United Nations has labeled a terrorist organization.

The first case, Gonzalez v. Google, concerns a series of coordinated ISIS attacks around Paris in 2015 that killed 130 people and wounded 500 more.

Nohemi Gonzalez, 23, was killed in the attack on a Parisian bistro. Her family has argued that Google aided ISIS recruitment efforts by allowing the group to post violence-inciting videos to YouTube and by recommending ISIS clips to users through its algorithms.

The Gonzalez v. Google decision will deal largely with the recommendation process, and whether internet companies can be held liable for making targeted, unsolicited recommendations of material posted by an outside party. If Google curates the YouTube library and performs other traditional editorial functions, does that make the company a publisher rather than simply a “service provider”? (This debate is often summarized as “platform vs. publisher,” but as Techdirt helpfully points out, the term “platform” doesn’t actually appear in Section 230. The law instead refers to providers of an “interactive computer service,” a key distinction.)

The second case, Twitter v. Taamneh, centers on the group’s New Year’s Day 2017 attack on Istanbul’s Reina nightclub, which killed 39 people. Filed by the family of Nawras Alassaf, a Jordanian man killed in the attack, the lawsuit argues that Twitter and other tech companies knew their platforms played a significant role in ISIS recruitment efforts, yet did not take aggressive steps to remove the organization and its members from their services.

Why do these cases matter so much?

Both cases raise difficult and unsettled questions about how Section 230’s protections apply in practice. Gonzalez v. Google in particular could prove especially consequential because recommendation algorithms are central to the architecture of the modern internet.

Algorithmic recommendation doesn’t just power features like YouTube’s “related videos”; it also underpins the ranking of results in Google’s search engine. A particularly extreme ruling in the Gonzalez case could theoretically find Google liable for objectionable content on any outside website to which it directs users. The lawsuit itself attempts to allay these concerns by differentiating between YouTube recommendations and Google search results. Nonetheless, the distinction highlights just how far-reaching and significant these rulings could be.

The Twitter ruling is likely to be narrower, and will attempt to establish what steps a website or platform needs to take to “aggressively” combat objectionable content such as terrorist recruitment. It also raises several open questions about what actually constitutes “aiding and abetting” a terrorist organization.

For example, if a court agrees that ISIS used Twitter as a gathering place or recruitment tool, can the company be held responsible for a later ISIS attack that wasn’t planned or discussed on the platform, and that Twitter had no way of knowing about?

The Biden administration has argued that Twitter could theoretically be held liable in certain circumstances, even if the company didn’t know about the specific attack being plotted and did not host discussions in support of it. However, the Department of Justice added that the plaintiffs in Twitter v. Taamneh have not gone far enough in showing that the company provided ISIS with anything beyond the “generalized support” it offers all users. The Taamneh family argues that the Anti-Terrorism Act was written to provide plaintiffs with “the broadest possible basis” to sue companies that assist terrorist groups.

Twitter, for its part, has suggested that reconsidering the definition of “aiding and abetting” terrorism would set up a particularly slippery slope, one that could expose even aid organizations and NGOs to liability if their assistance or services inadvertently end up aiding ISIS operations.

Looking Ahead

Even if these two cases don’t significantly change legal precedent on Section 230, more challenges to the law are likely in the months and years ahead. In 2022, in a surprise ruling, the Fifth Circuit Court of Appeals upheld a Texas law barring large social media platforms from moderating content based on “viewpoint,” which could have major implications for free speech and content curation moving forward. There are no easy answers when it comes to communication and publishing online, which is why we’re probably going to be having these kinds of arguments for a long time.

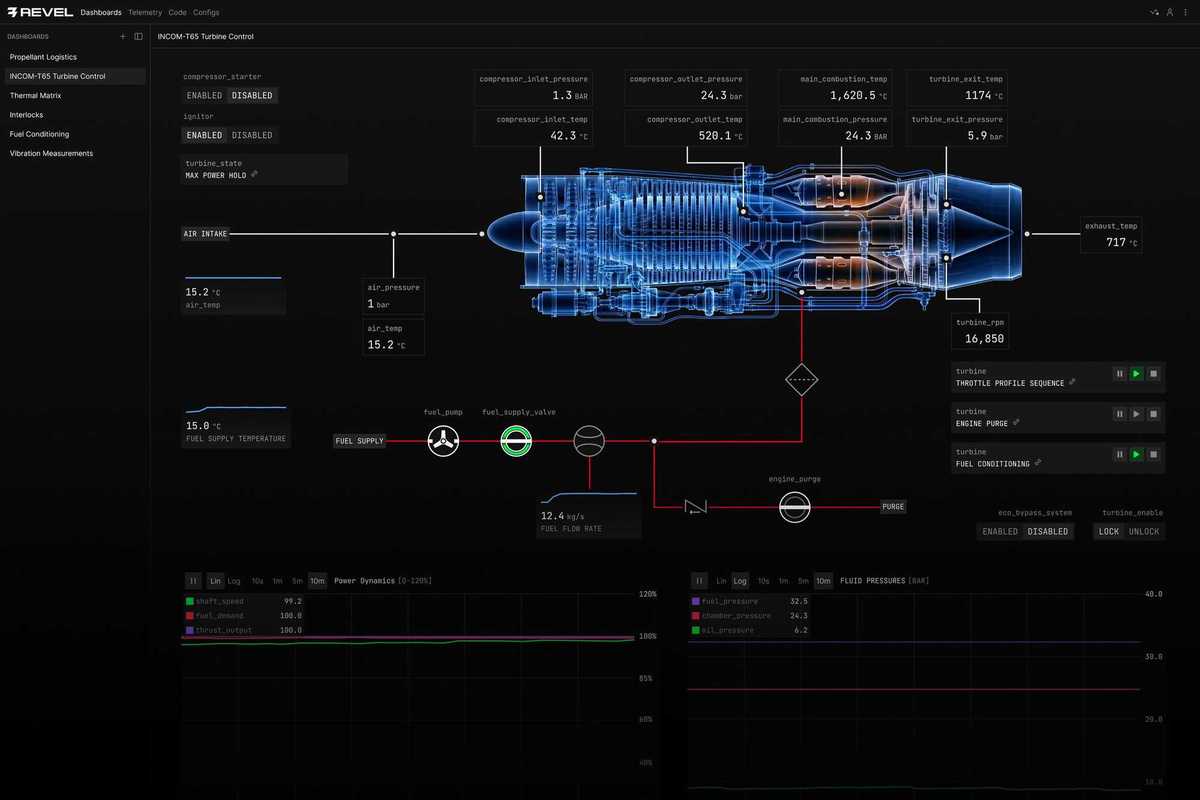

Image Source: Revel