TikTok Potentially Faces $29 Million Fine Over Child Data Privacy

Kristin Snyder is dot.LA's 2022/23 Editorial Fellow. She previously interned with Tiger Oak Media and led the arts section for UCLA's Daily Bruin.

TikTok may face a $29 million fine in the United Kingdom.

According to the New York Times, British regulators from the Information Commissioner’s Office (ICO) sent the video-sharing app a warning about how the company handled its young users’ data. The notice, which indicates that the data protection agency plans to hand out a fine, claims that TikTok did not obtain parental permission to handle children’s information, illegally processed sensitive details and did not make its data policies understandable to children.

These requirements stem from the Children’s Code, a set of standards that aims to protect children’s data. Online services likely to be accessed by children, such as social media websites and gaming platforms, have to be designed with young users in mind. The code requires high privacy settings and denies companies the ability to track young users’ precise locations. The ICO recently said it was investigating how 50 online platforms have complied with the year-old policy.

Thomas Germain, a Consumer Reports writer specializing in privacy issues, says regulation is difficult because these companies are set up to collect data, not protect people’s privacy.

“I would expect that we're just going to see companies violating this law over and over, especially in the beginning, because it's such a big shift to turn around,” Germain tells dot.LA.

While Germain doesn’t believe the Children’s Code is likely to affect how companies function in America, it does speak to the growing concerns around data privacy in the U.S. In the past, however, social media platforms like TikTok have mitigated these concerns by making country- or even state-specific changes. For example, Snapchat limits certain filters in some U.S. states. Alternatively, Germain says TikTok could make broad changes to the app at large in anticipation of similar legislation elsewhere. YouTube, for instance, made changes to its video uploading policies ahead of the U.K.’s legislation.

The Children’s Code served as the basis for the California Age-Appropriate Design Code Act, which Gov. Gavin Newsom signed into law earlier this month. Taking effect in 2024, the law requires online services to make high-privacy settings the default and write privacy policies in accessible language. In addition, companies would not be able to target young users or use their information in harmful ways. Companies that intentionally violate the act can face fines of up to $7,500 per affected child.

Legislation meant to regulate large tech companies can often take some time to actually have an impact, Germain says. As data privacy is still a relatively new concern in the American legal system, he says many large companies have not yet taken measures to enact changes.

“The way that these things generally go is regulators pass a law, and then they have to fine people over and over and over to get the industry to start complying with the rule,” he says.

With that said, this isn’t TikTok’s first time facing pressure over child data privacy. Back when TikTok was still Musical.ly, the company was fined $5.7 million for collecting data from users under the age of 13, a violation of the Children's Online Privacy Protection Act (COPPA). Alleged COPPA violations popped up again in 2020 when a coalition of child privacy protection advocacy groups filed a complaint with the U.S. Federal Trade Commission. The groups claimed that the app was not doing enough to protect young users and alert parents to how their children’s data was used.

Nonetheless, using fines to push companies to change their behavior has long been a futile way to institute reform, since, according to Germain, major companies like TikTok have the means to pay them. For a law to truly make a difference, Germain says it would likely have to include a fine so massive that even major corporations couldn’t feasibly pay it.

“They're making so much profit that they can afford [fines],” he says. “It's not really going to do enough in the long term to change industry-wide behavior, and I think that's a big part of the issue.”



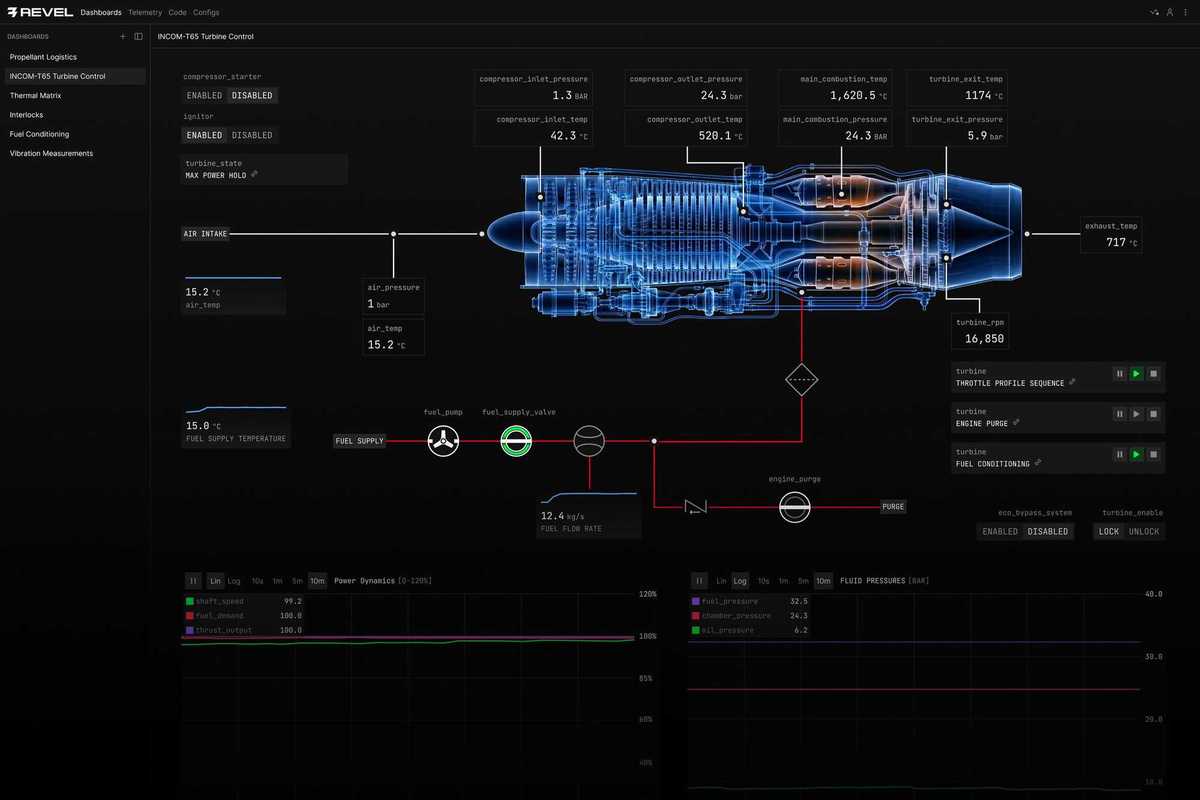

Image Source: Revel

Image Source: Revel