This is the web version of dot.LA’s daily newsletter. Sign up to get the latest news on Southern California’s tech, startup and venture capital scene.

Hey there, welcome to 2023! It's the future now, which means it's time we took a long, hard look at what's going on with AI. Because all of a sudden, it's everywhere, at least in terms of the general public's understanding of and access to it.

And no, I'm not just talking about NSFW AI-rendered avatars, though it will never stop being hilarious that this is how whatever is left of humanity will remember the dawn of the machine age.

But with time literally speeding up, the perviness of yesterday's artificial intelligentsia is already old news, along with breaking updates about AI stealing from artists, doing your kids' homework, displacing Google and taking away jobs not from the expected technical and manual labor sectors, but from the previously unthinkable field of creatives.

So look: We can debate the ethics of using AI in artistic (or editorial!) endeavors. The validity of copyright claims, both for the artists whose works have been used in deep learning training sets and the AI-generated art itself. The liability of companies that purposefully bury release claims in the terms of service agreements.

The impossible hotel that sits in a deserted beach during a bright sunrise. The building’s architectural look like boat sails, photorealistic, high def. (Dall-E)

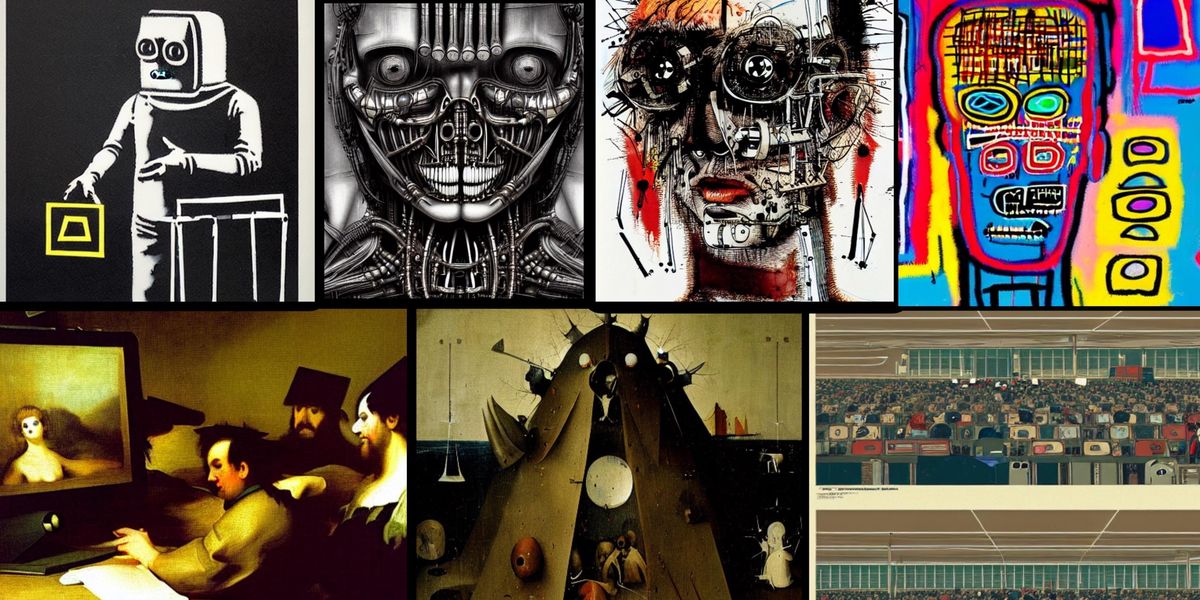

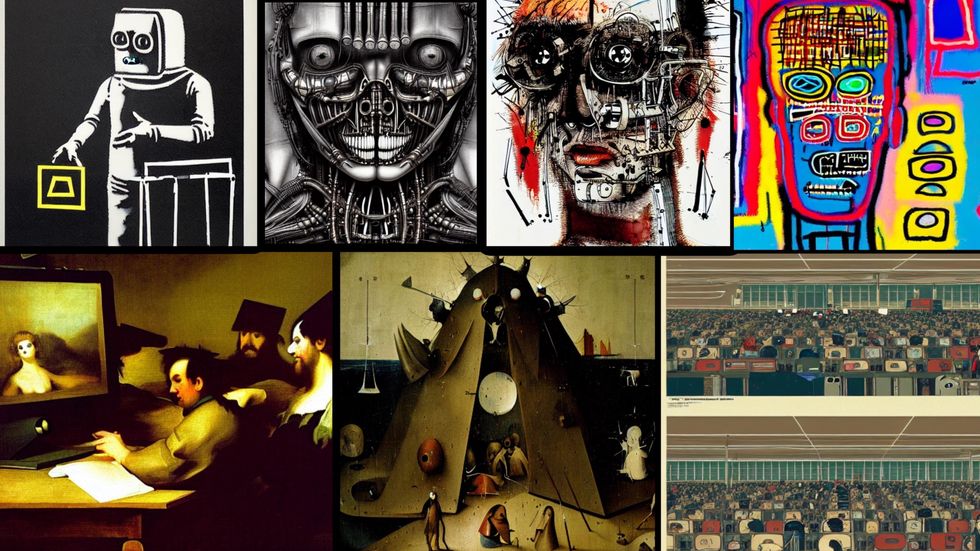

The Argument Against AI in the Arts, By AI

But we also need to take a step back and realize what a remarkable tool we've just been handed. It has become, in the space of the holiday break, the most exciting and necessary piece of coding I've used in the 15+ years I've worked on the Internet. There is no going back.

Dall-E, created by OpenAI, has technically been around since January 2021, but its successor, Dall-E 2, didn't open to the general public until September of last year. Using a deep learning neural network and drawing on a vast data set from the internet, Dall-E generates images from textual descriptions. You can get highly specific with your prompts: if you dream it, Dall-E can build it.

But Dall-E 2 wasn't perfect: it was stumped by both words (which came out looking like made-up Russian) and, for some reason, human faces (which tended to look like terrifying nightmare vortexes). The implications of Dall-E were vast, but on a practical level, it was hard to see how this could replace the need for human art.

Then, very quickly--over the course of a few months, maybe even weeks--all AI hell broke loose. Suddenly, AI-rendered images were winning state art fairs. DeviantArt, the largest online art gallery, announced that it was creating its own AI generator, causing an immediate backlash as users realized the AI had been trained on their work. A few weeks later, the whole Lensa mishegoss. And in our rush to Google how AI art produced such high-quality images in mere seconds, the answers just begot more confusion.

For instance: Stable Diffusion is the name of one specific AI image generator, not to be confused with diffusion, the general training technique used by many deep-learning image generators. In diffusion models, the training process adds random Gaussian noise--like TV static--that gradually "destroys" the original image, forcing the AI to learn how to reverse the damage and recover pictures from a field of random dots, which eventually lets it conjure images from seemingly thin air.
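For the curious, here is a rough sketch of that "add static, then undo it" idea in plain Python. It is a toy under stated assumptions, not how any particular generator is actually built: the function names are made up for illustration, the noise schedule values are just commonly seen defaults, and the "image" is a short list of pixel brightness values. The big cheat is the last function, which is handed the exact noise that was added; a real model has to be trained to guess that noise.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """How much noise to add at each step (illustrative defaults, not from any specific product)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def signal_fraction(betas):
    """Running product of (1 - beta): the share of the original image left after each step."""
    remaining = []
    running = 1.0
    for beta in betas:
        running *= 1.0 - beta
        remaining.append(running)
    return remaining


def add_noise(pixels, t, alpha_bar, rng):
    """Blend the image with Gaussian static at step t. Returns the noisy image and the static used."""
    keep = math.sqrt(alpha_bar[t])
    spread = math.sqrt(1.0 - alpha_bar[t])
    noise = [rng.gauss(0.0, 1.0) for _ in pixels]
    noisy = [keep * p + spread * n for p, n in zip(pixels, noise)]
    return noisy, noise


def recover_image(noisy, noise, t, alpha_bar):
    """Undo add_noise when the static is known exactly (a trained model would have to predict it)."""
    keep = math.sqrt(alpha_bar[t])
    spread = math.sqrt(1.0 - alpha_bar[t])
    return [(x - spread * n) / keep for x, n in zip(noisy, noise)]


if __name__ == "__main__":
    rng = random.Random(0)
    alpha_bar = signal_fraction(linear_beta_schedule(1000))
    pixels = [0.0, 0.25, 0.5, 1.0]  # a four-pixel "image"
    noisy, noise = add_noise(pixels, 500, alpha_bar, rng)
    print("noisy:   ", noisy)
    print("restored:", recover_image(noisy, noise, 500, alpha_bar))
```

By the final step of this toy schedule, almost none of the original picture is left in the mix, which is the point: if a model can learn to peel the static back off one step at a time, it can start from pure static and work its way to an image.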

Then there are the programs themselves: the biggies are Dall-E 2 (which runs in a browser and lets you edit images and extend them with matching generated frames based on text), Midjourney (the one that runs on Discord and offers both a free tier and a monthly subscription), Stable Diffusion (the open source, free "ethical" one) and Google's DreamFusion (the one that makes 3D art). Then there's a host of other apps and programs, like Nightcafe, Jasper, Starryai, Fotor (the one that lets you make NFTs, though why you'd want to is another question), Deep Dream Generator and StableCog.

There are currently more apps offering AI photo editing, image generation and avatar creation than any other type of AI application. There's a reason Lensa was the gateway drug: it was inevitable that our first practical, everyday use for Artificial Intelligence would be posting cute pics on the 'gram. And yes, this leads to some thorny issues: legally, ethically, morally, philosophically. But why is that a bad thing? The internet did the same thing, and no one is telling us to avoid using that.

It's the "tool vs weapon" idea from the 2016 sci-fi/thriller "Arrival": that the two concepts are interchangeable, depending on your vantage point, that in some languages they're the same word. People look at AI art and see a list of reasons to be concerned for the future of (quite literally) Art in the Humanities. I look at deep learning models and see limitless potential, the miracle of artistic expression, instantaneously. It has made me more curious about the world, inspired me, and allowed me to tackle problems under a different paradigm that would never have been possible a few months ago.

Results vary, and you really have to play around with how you phrase your prompts: some require highly detailed descriptions to render what you want, while others work best on the "less is more" approach.

The coolest part of AI is that it can adapt, learn, respond. It can hold conversations, in the way the best artists can. You can pose your concerns about the validity of AI in the arts as a prompt, and it will come back at you with some really interesting answers.

The header image was made with various prompts for "Visualizing the argument against AI in the Arts." Just on a meta-level, these images make for a strong argument against AI from a copyright perspective, since they appropriate the style of famous artists to create entirely original images.

We can--and should!--have existential arguments about the nature of Art: whether it's defined by its production or the final product; if a medium can be entirely disqualified because it's "cheating." There's a long road of legal precedents to be set here by the courts, but on aesthetic grounds, are we saying it's not Art if it was (in part) created by a What and not a Who?

Are we ready to disqualify Pixar films on the same grounds?

Because here's the thing: AI cannot generate itself (at least not yet). Humans are still needed to come up with the core concept, and beyond that, to refine and tweak and spend some hours extrapolating terminology that will make AI programs produce the images they want. AI learns, but it cannot imagine.

I am not worried about losing my job to AI. If what I contribute can be condensed down to a single, reusable prompt or a line of running code, then that's a me-problem. We're more than just a well-executed prompt or code. If that's what you see when you look at ChatGPT or Dall-E 2, then I suggest you spend some more time exploring them…and yourself. - Drew Grant

LA in 2028: A Look at Transformational Tech in the City

SmartLA 2028--Mayor Garcetti's initiative to (among other things) break Los Angeles out of its dependency on fossil fuels and switch to mass transit--is still in the works. Here's what the future might look like for the city.

What We’re Reading...

- How cutting-edge technology created the underwater sequences in "Avatar II." Because if there's one thing James Cameron loves more than space, it's the ocean.

- Artists can still use Web3 and the blockchain to create authentic fan experiences, argues Forbes. Maybe just stay away from crypto and NFTs.

- How we can recover from the "lost decade" of bad tech.


How Are We Doing? We're working to make the newsletter more informative, with deeper analysis and more news about L.A.'s tech and startup scene. Let us know what you think in our survey, or email us!